{

"llmModels": [

{

"provider": "Other", // 模型提供商,主要用于分类展示,目前已经内置提供商包括:https://github.com/labring/FastGPT/blob/main/packages/global/core/ai/provider.ts, 可 pr 提供新的提供商,或直接填写 Other

"model": "Qwen/Qwen2.5-72B-Instruct", // 模型名(对应OneAPI中渠道的模型名)

"name": "Qwen2.5-72B-Instruct", // 模型别名

"maxContext": 32000, // 最大上下文

"maxResponse": 4000, // 最大回复

"quoteMaxToken": 30000, // 最大引用内容

"maxTemperature": 1, // 最大温度

"charsPointsPrice": 0, // n积分/1k token(商业版)

"censor": false, // 是否开启敏感校验(商业版)

"vision": false, // 是否支持图片输入

"datasetProcess": true, // 是否设置为文本理解模型(QA),务必保证至少有一个为true,否则知识库会报错

"usedInClassify": true, // 是否用于问题分类(务必保证至少有一个为true)

"usedInExtractFields": true, // 是否用于内容提取(务必保证至少有一个为true)

"usedInToolCall": true, // 是否用于工具调用(务必保证至少有一个为true)

"usedInQueryExtension": true, // 是否用于问题优化(务必保证至少有一个为true)

"toolChoice": true, // 是否支持工具选择(分类,内容提取,工具调用会用到。)

"functionCall": false, // 是否支持函数调用(分类,内容提取,工具调用会用到。会优先使用 toolChoice,如果为false,则使用 functionCall,如果仍为 false,则使用提示词模式)

"customCQPrompt": "", // 自定义文本分类提示词(不支持工具和函数调用的模型

"customExtractPrompt": "", // 自定义内容提取提示词

"defaultSystemChatPrompt": "", // 对话默认携带的系统提示词

"defaultConfig": {}, // 请求API时,挟带一些默认配置(比如 GLM4 的 top_p)

"fieldMap": {} // 字段映射(o1 模型需要把 max_tokens 映射为 max_completion_tokens)

},

{

"provider": "Other",

"model": "Qwen/Qwen2-VL-72B-Instruct",

"name": "Qwen2-VL-72B-Instruct",

"maxContext": 32000,

"maxResponse": 4000,

"quoteMaxToken": 30000,

"maxTemperature": 1,

"charsPointsPrice": 0,

"censor": false,

"vision": true,

"datasetProcess": false,

"usedInClassify": false,

"usedInExtractFields": false,

"usedInToolCall": false,

"usedInQueryExtension": false,

"toolChoice": false,

"functionCall": false,

"customCQPrompt": "",

"customExtractPrompt": "",

"defaultSystemChatPrompt": "",

"defaultConfig": {}

}

],

"vectorModels": [

{

"provider": "Other",

"model": "Pro/BAAI/bge-m3",

"name": "Pro/BAAI/bge-m3",

"charsPointsPrice": 0,

"defaultToken": 512,

"maxToken": 5000,

"weight": 100

}

],

"reRankModels": [

{

"model": "BAAI/bge-reranker-v2-m3", // 这里的model需要对应 siliconflow 的模型名

"name": "BAAI/bge-reranker-v2-m3",

"requestUrl": "https://api.siliconflow.cn/v1/rerank",

"requestAuth": "siliconflow 上申请的 key"

}

],

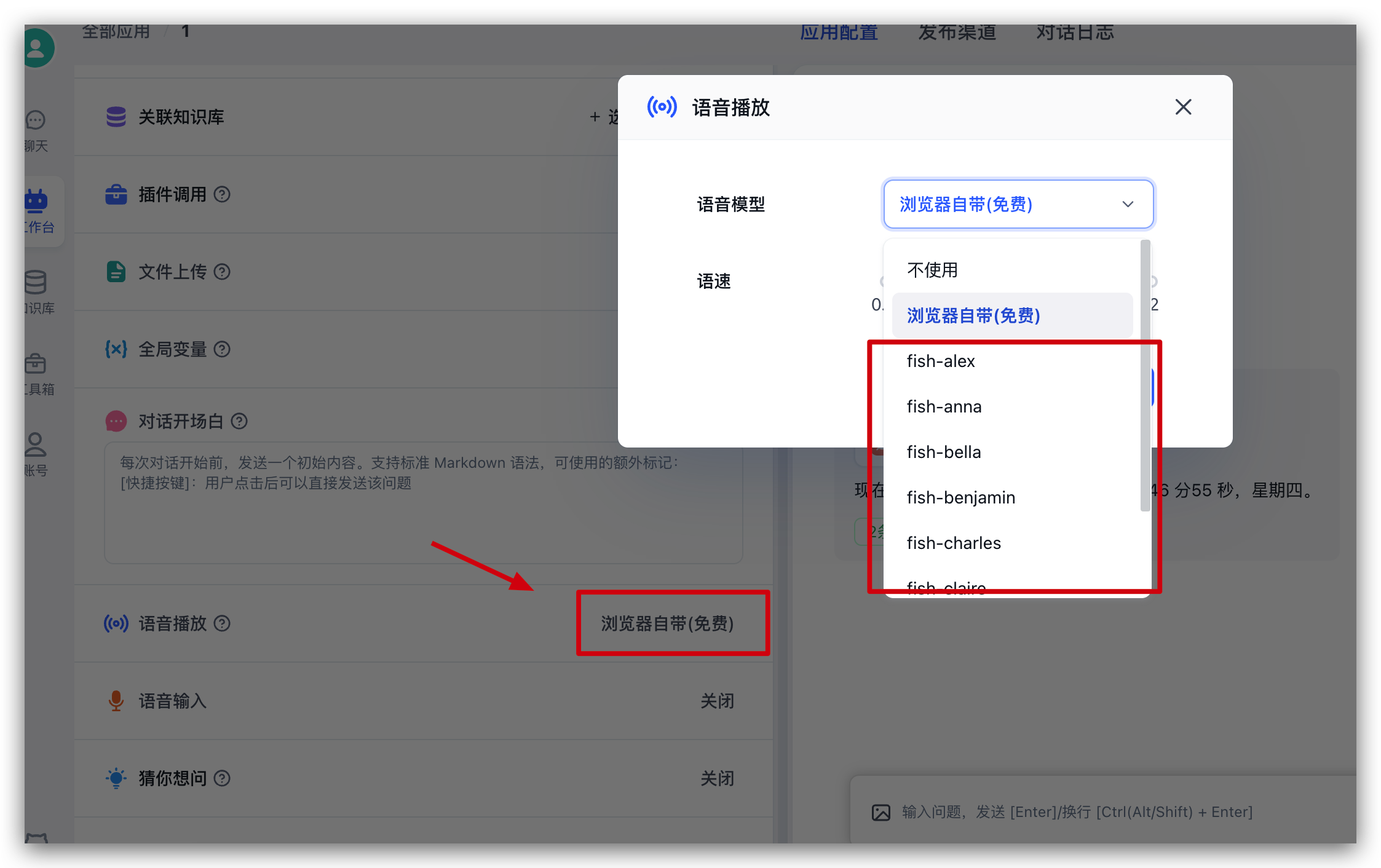

"audioSpeechModels": [

{

"model": "fishaudio/fish-speech-1.5",

"name": "fish-speech-1.5",

"voices": [

{

"label": "fish-alex",

"value": "fishaudio/fish-speech-1.5:alex",

"bufferId": "fish-alex"

},

{

"label": "fish-anna",

"value": "fishaudio/fish-speech-1.5:anna",

"bufferId": "fish-anna"

},

{

"label": "fish-bella",

"value": "fishaudio/fish-speech-1.5:bella",

"bufferId": "fish-bella"

},

{

"label": "fish-benjamin",

"value": "fishaudio/fish-speech-1.5:benjamin",

"bufferId": "fish-benjamin"

},

{

"label": "fish-charles",

"value": "fishaudio/fish-speech-1.5:charles",

"bufferId": "fish-charles"

},

{

"label": "fish-claire",

"value": "fishaudio/fish-speech-1.5:claire",

"bufferId": "fish-claire"

},

{

"label": "fish-david",

"value": "fishaudio/fish-speech-1.5:david",

"bufferId": "fish-david"

},

{

"label": "fish-diana",

"value": "fishaudio/fish-speech-1.5:diana",

"bufferId": "fish-diana"

}

]

}

],

"whisperModel": {

"model": "FunAudioLLM/SenseVoiceSmall",

"name": "SenseVoiceSmall",

"charsPointsPrice": 0

}

}

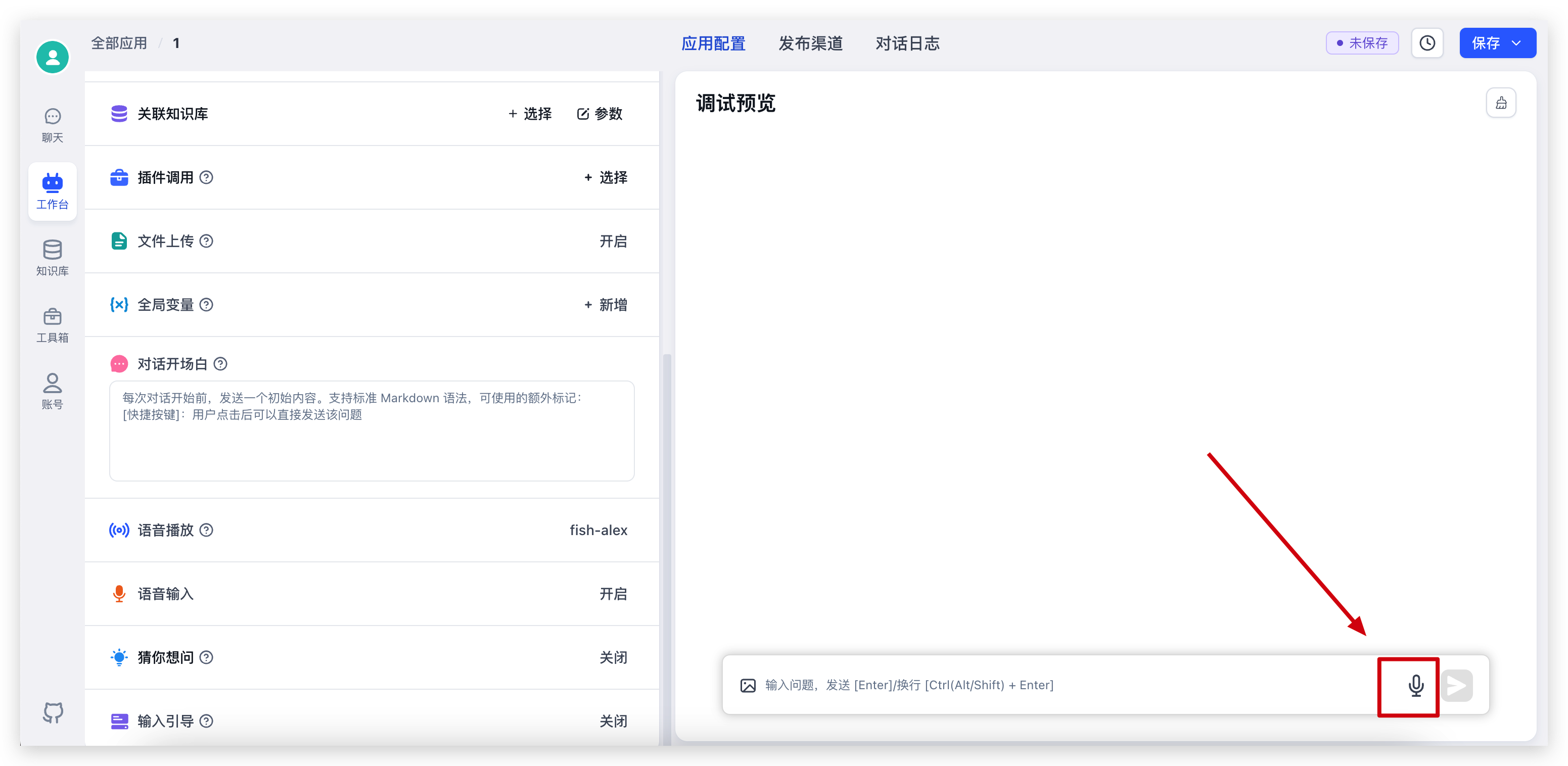

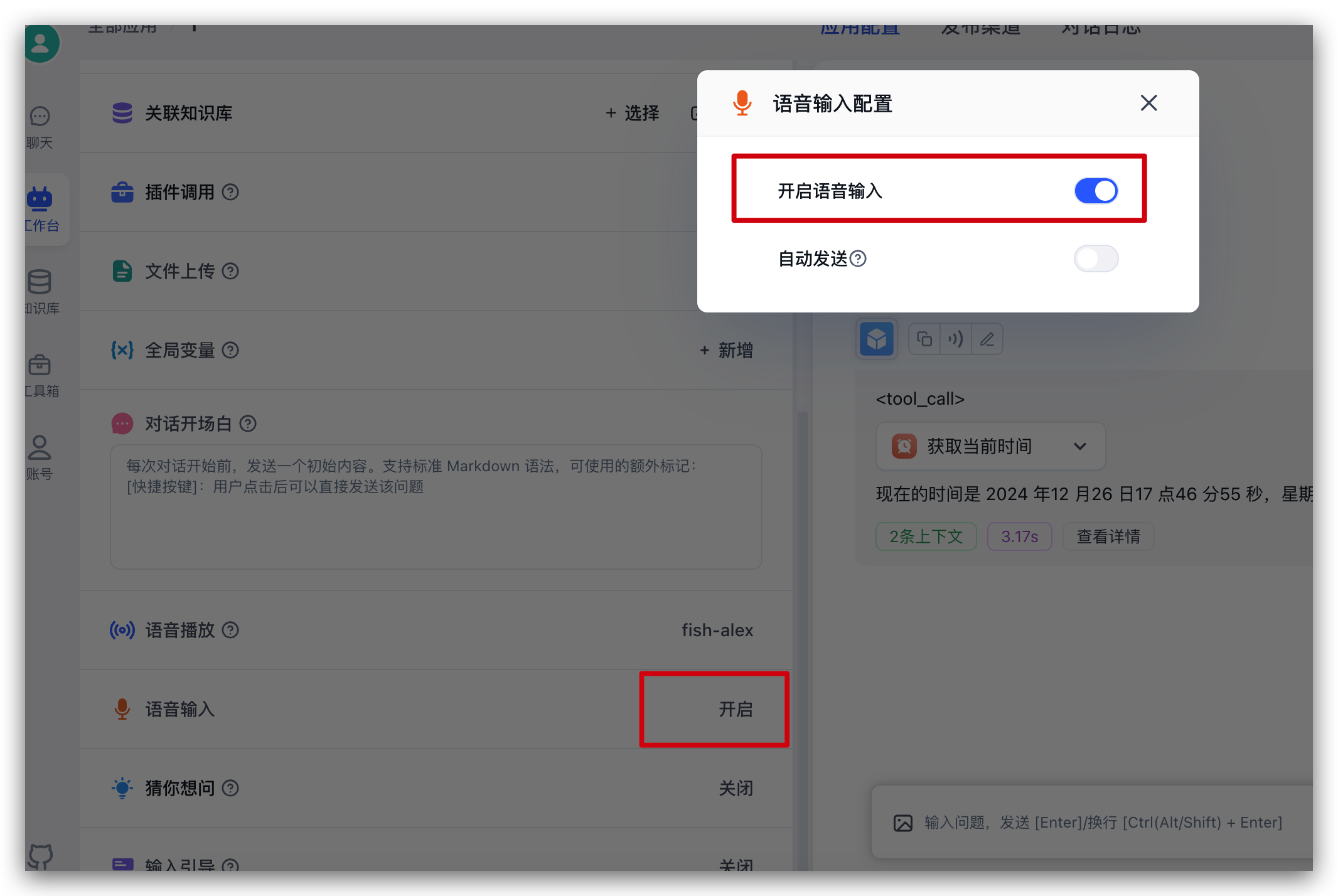

开启后,对话输入框中,会增加一个话筒的图标,点击可进行语音输入:

开启后,对话输入框中,会增加一个话筒的图标,点击可进行语音输入: