# Create a speech-to-text request

Source: https://docs.siliconflow.cn/cn/api-reference/audio/create-audio-transcriptions

post /audio/transcriptions

Creates an audio transcription.

# Create a text-to-speech request

Source: https://docs.siliconflow.cn/cn/api-reference/audio/create-speech

post /audio/speech

Generates audio from input text. The endpoint returns the audio as binary data, which the caller must handle. Reference: https://docs.siliconflow.cn/capabilities/text-to-speech#5

# Delete a reference audio

Source: https://docs.siliconflow.cn/cn/api-reference/audio/delete-voice

post /audio/voice/deletions

Deletes a user-defined voice style.

# Upload a reference audio

Source: https://docs.siliconflow.cn/cn/api-reference/audio/upload-voice

post /uploads/audio/voice

Uploads a user-provided voice style, either base64-encoded or as a file. See https://docs.siliconflow.cn/capabilities/text-to-speech#2-2

# List reference audio

Source: https://docs.siliconflow.cn/cn/api-reference/audio/voice-list

get /audio/voice/list

Gets the list of user-defined voice styles.

# Cancel a batch job

Source: https://docs.siliconflow.cn/cn/api-reference/batch/cancel-batch

post /batches/{batch_id}/cancel

Cancels the batch identified by its unique ID.

# Create a batch job

Source: https://docs.siliconflow.cn/cn/api-reference/batch/create-batch

post /batches

Creates a batch job.

# Retrieve batch job details

Source: https://docs.siliconflow.cn/cn/api-reference/batch/get-batch

get /batches/{batch_id}

Retrieves a batch.

# List batch jobs

Source: https://docs.siliconflow.cn/cn/api-reference/batch/get-batch-list

get /batches

Lists your organization's batches.

# List files

Source: https://docs.siliconflow.cn/cn/api-reference/batch/get-file-list

get /files

Returns a list of files.

# Upload a file

Source: https://docs.siliconflow.cn/cn/api-reference/batch/upload-file

post /files

Uploads a file.

# Create a chat completion request

Source: https://docs.siliconflow.cn/cn/api-reference/chat-completions/chat-completions

post /chat/completions

Creates a model response for the given chat conversation.

# Create an embeddings request

Source: https://docs.siliconflow.cn/cn/api-reference/embeddings/create-embeddings

post /embeddings

Creates an embedding vector representing the input text.

# Create an image generation request

Source: https://docs.siliconflow.cn/cn/api-reference/images/images-generations

post /images/generations

Creates an image response for the given prompt. The URL of the generated image is valid for one hour, so download and store the image promptly before the URL expires.

# Get the user's model list

Source: https://docs.siliconflow.cn/cn/api-reference/models/get-model-list

get /models

Retrieves model information.

# Create a rerank request

Source: https://docs.siliconflow.cn/cn/api-reference/rerank/create-rerank

post /rerank

Creates a rerank request.

# Get user account information

Source: https://docs.siliconflow.cn/cn/api-reference/userinfo/get-user-info

get /user/info

Gets user information, including balance and status.

# Get the video generation link

Source: https://docs.siliconflow.cn/cn/api-reference/videos/get_videos_status

post /video/status

Gets the user-generated video. The URL of the generated video is valid for one hour, so download and store the video promptly before the URL expires.

# Create a video generation request

Source: https://docs.siliconflow.cn/cn/api-reference/videos/videos_submit

post /video/submit

Generate a video through the input prompt. This API returns the user's current request ID. The user needs to poll the status interface to get the specific video link. The generated result is valid for 10 minutes, so please retrieve the video link promptly.
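The submit-then-poll flow described above can be sketched as a small polling helper. This is only an illustration under stated assumptions: `fetch_status` is a hypothetical callable standing in for a call to `post /video/status`, and the response keys `status` and `url` and the values `"Succeed"` and `"Failed"` are assumed for the example, not taken from the API reference.

```python
import time
from typing import Callable, Optional


def wait_for_video(
    request_id: str,
    fetch_status: Callable[[str], dict],
    interval_s: float = 5.0,
    max_attempts: int = 60,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[str]:
    """Poll until the (assumed) status payload reports a video URL.

    `fetch_status` is a hypothetical wrapper around POST /video/status.
    The "status"/"url" keys and the "Succeed"/"Failed" values are assumptions.
    """
    for _ in range(max_attempts):
        result = fetch_status(request_id)
        if result.get("status") == "Succeed":
            return result.get("url")
        if result.get("status") == "Failed":
            raise RuntimeError(f"video generation failed: {result}")
        sleep(interval_s)  # wait before asking again
    return None  # gave up; the caller decides whether to keep polling
```

Injecting `fetch_status` and `sleep` keeps the loop independent of any HTTP client and makes it easy to exercise without network access.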

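# Real-name verification

Source: https://docs.siliconflow.cn/cn/faqs/authentication

## 1. Why is real-name verification required?

Laws and regulations such as the Cybersecurity Law of the People's Republic of China require network operators, when handling network access for users and when signing agreements with users or confirming the provision of services, to require users to provide real identity information. If a user does not provide real identity information, the network operator must not provide the related services.

## 2. What happens to my account if I do not complete real-name verification?

Without real-name verification, the account cannot:

* top up the account balance
* request an invoice

## 3. What is the difference between individual and enterprise verification?

Account real-name verification comes in two types, **individual verification** and **enterprise verification**:

* Individual verification: the verification type is individual; face-recognition verification of the individual is supported.
* Enterprise verification: the verification type is enterprise (including ordinary companies, government bodies, public institutions, social organizations, sole proprietorships, and so on); two methods are supported: face recognition of the legal representative, and verification by a transfer to the corporate bank account.

**How the verification type affects the account:**

1. It determines who owns the account.
   * An account that completes enterprise verification **belongs to the enterprise**.
   * An account that completes individual verification **belongs to the individual**.
2. It determines the invoicing information.
   * Enterprise-verified accounts **can be issued special VAT invoices and ordinary VAT invoices under the enterprise's name**.
   * Individual-verified accounts **can only be issued** ordinary VAT invoices under the individual's name.

Notes:

* Real-name verification information matters for the security of your account and funds; please verify **according to your actual situation**.
* For the security of your account, enterprise users should not complete individual verification.
* An account can currently be bound to only one verified entity; after the account entity is changed successfully, the original entity's information is unbound from the account.

## 4. How do I complete individual verification?

### 4.1 Supported ID documents for individual verification

Individual verification supports the following document types:

* Mainland China resident ID card
* Mainland Travel Permit for Hong Kong and Macao Residents (Home Return Permit)
* Mainland Travel Permit for Taiwan Residents
* Residence Permit for Hong Kong and Macao Residents
* Residence Permit for Taiwan Residents
* Foreign Permanent Resident ID Card

Users who hold none of these documents cannot currently complete individual verification online. They can submit this [form](https://siliconflow.feishu.cn/share/base/form/shrcnF4a7pFS2eR4wJj9rneM7mc?auth_token=U7CK1RF-ddcuca83-7f21-437e-9ef2-208b950e9f7f-NN5W4\&ccm_open_type=form_v1_qrcode_share\&share_link_type=qrcode) to contact staff and try another verification method.

### 4.2 Individual verification procedure

1. Log in to the SiliconCloud platform and open [User Center - Real-name verification](https://cloud.siliconflow.cn/account/authentication).
2. On the real-name verification page, select "individual verification" as the verification type, then fill in your personal information.
3. Scan the QR code with the Alipay app on your phone and follow the prompts on the phone to complete face recognition. After it succeeds, click "已完成刷脸认证" (face verification completed) in the pop-up on the web page.
4. After successful verification you can **modify the verification information** or **switch to an enterprise user**; only one change or modification can be completed within 30 days.

Notes:

* Real-name verification directly determines who owns the account. If you are an enterprise user, complete enterprise verification to avoid unnecessary disputes caused by staff changes and similar factors. For more information, see section 3 on the differences between individual and enterprise verification.
* In accordance with the relevant laws and regulations, we do not provide online real-name verification to individuals under 14 years of age.

## 5. How do I complete enterprise verification?

1. Log in to the SiliconCloud platform and open "User Center - Real-name verification".
2. On the real-name verification page, select "enterprise verification" as the verification type, then choose one of the two verification methods:
   * Face recognition of the legal representative
     1. Fill in the enterprise name, unified social credit code, legal representative's name, and legal representative's ID number, then tick the box to accept the agreement.
     2. The legal representative scans the QR code with the Alipay app and follows the prompts on the phone to complete face recognition; verification is complete once it succeeds.
   * Transfer to the corporate bank account
     1. Fill in the enterprise name, unified social credit code, and legal representative's name, then tick the box to accept the agreement.
     2. Fill in the corporate bank account number and the bank name (down to the branch), select the specific account-opening bank, confirm the details, and click to get the verification amount.
     3. Wait for the random transfer to arrive, usually within 10 minutes.
     4. After the transfer succeeds, check with your finance team for the random amount received (less than 1 yuan) and enter that amount on the page; verification succeeds once the amount is confirmed.

After successful verification you can **modify the verification information**; only one modification is allowed within 30 days.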

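# Error handling

Source: https://docs.siliconflow.cn/cn/faqs/error-code

**1. Get the HTTP error code to narrow down the problem**

a. In your code, print the error code and the error message (`message`) whenever possible; this information is enough to locate most problems.

```shell
HTTP/1.1 400 Bad Request
Date: Thu, 19 Dec 2024 08:39:19 GMT
Content-Type: application/json; charset=utf-8
Content-Length: 87
Connection: keep-alive

{"code":20012,"message":"Model does not exist. Please check it carefully.","data":null}
```

* Common error codes and causes:
  * **400**: incorrect parameters; use the error message (`message`) to fix the invalid request parameters.
  * **401**: the API key is not set correctly.
  * **403**: insufficient permission; the most common cause is that the model requires real-name verification. For other cases, refer to the error message (`message`).
  * **429**: a rate limit was triggered. Use the error message (`message`) to determine which of `RPM / RPD / TPM / TPD / IPM / IPD` was hit, and see [Rate Limits](https://docs.siliconflow.cn/rate-limits/rate-limit-and-upgradation) for the rate-limiting policy.
  * **504 / 503**:
    * Usually the service is under heavy load; try again later.
    * For chat and text-to-speech requests, try streaming output (`"stream": true`); see [Streaming output](https://docs.siliconflow.cn/faqs/stream-mode).
  * **500**: an unknown error occurred in the service; contact the relevant staff for investigation.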
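As a rough illustration of printing both the status code and the message, the helper below formats an error response together with a hint for its status code. It is a minimal sketch: the function name `describe_error` and the hint wording are made up for this example, and the body is assumed to be the JSON shape shown in the sample response above.

```python
import json

# Hints paraphrased from the status-code list; the wording is illustrative.
HINTS = {
    400: "invalid parameters; fix the request according to the message",
    401: "API key is not set correctly",
    403: "insufficient permission; the model may require real-name verification",
    429: "rate limit hit; check which of RPM/RPD/TPM/TPD/IPM/IPD was triggered",
    500: "unknown server error; contact support",
    503: "service under heavy load; retry later or use streaming",
    504: "service under heavy load; retry later or use streaming",
}


def describe_error(status_code: int, body: str) -> str:
    """Combine the HTTP status, the platform's code/message, and a hint."""
    try:
        payload = json.loads(body)
    except json.JSONDecodeError:
        payload = {}
    if not isinstance(payload, dict):
        payload = {}  # body was valid JSON but not an object
    code = payload.get("code")
    message = payload.get("message", body)  # fall back to the raw body
    hint = HINTS.get(status_code, "see the message for details")
    return f"HTTP {status_code} (code={code}): {message} [{hint}]"
```

With an HTTP client such as `requests`, this could be called as `describe_error(response.status_code, response.text)` whenever the status is not 200.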

b. If the client does not print this information, try running a curl command from the command line (using an LLM as the example):

```shell
curl --request POST \
  --url https://api.siliconflow.cn/v1/chat/completions \
  --header 'accept: application/json' \
  --header 'authorization: Bearer YOUR_API_KEY' \
  --header 'content-type: application/json' \
  --data '
{
  "model": "YOUR_MODEL_NAME",
  "messages": [
    {
      "role": "user",
      "content": "Hello"
    }
  ],
  "max_tokens": 128
}' -i
```

**2. Try a different model and see whether the problem persists**

**3. If you are using a proxy, try disabling it and accessing the service again**

For other problems, please submit feedback through the [SiliconFlow MaaS online request form](https://m09tqret04o.feishu.cn/share/base/form/shrcnaamvQ4C7YKMixVnS32k1G3).

# Invoicing

Source: https://docs.siliconflow.cn/cn/faqs/invoice

## 1. Invoicing entity

Invoicing entity: Beijing SiliconFlow Technology Co., Ltd. (北京硅基流动科技有限公司)

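## 2. Invoicing turnaround

Invoices are usually issued within 2 working days of your request.

## 3. Invoice amount

Only the `amount already consumed` can be invoiced. Topped-up balance that has not been consumed cannot be invoiced; you may request a refund for it as appropriate. An amount that has already been invoiced cannot be invoiced again.

## 4. Invoice types

The platform currently issues only fully digitalized electronic invoices (数电发票).

Enterprise-verified users can be issued special VAT invoices or ordinary VAT invoices under the enterprise's name.

Individual-verified users can only be issued ordinary VAT invoices under the individual's name.

## 5. Invoicing procedure

### 5.1 Self-service invoicing

* Log in to [SiliconCloud](https://cloud.siliconflow.cn/) and open the [Invoicing](https://cloud.siliconflow.cn/invoice) page.
* Click the `申请开票` (request invoice) button.
* Enter the `申请开票金额` (invoice amount).
* Select the `费用名称` (fee name).
* Select the `抬头名称和税号` (invoice title and tax number).
* Select the `发票类型` (invoice type).
* Tick the `发票接收方式` (delivery method).
* Click the `申请发票` (submit request) button.
* Requested invoices appear in the invoice records; once an invoice has been issued, click `下载` (download) to get it.

### 5.2 Invoicing by the platform after user registration

Under China's tax policies, the invoice title must match the name of the real-name verified entity, so an individual account cannot be issued an invoice under an organization's name. If you need one, you can [change the verified entity](https://cloud.siliconflow.cn/account/authentication). If completing verification is genuinely difficult, register through the registration page on the [Invoicing](https://cloud.siliconflow.cn/invoice) page.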

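# Listing guide

Source: https://docs.siliconflow.cn/cn/faqs/listing_guide

# SiliconFlow AI application compliance listing guide

The following material applies to: China

## Background

The SiliconFlow MaaS platform provides developers with technical support such as accelerated inference frameworks for open-source large models. Applications and mini programs built by developers access open-source model families such as DeepSeek and Tongyi Qianwen (通义千问) through the SiliconFlow MaaS platform.

When a developer wants to list such an application or mini program on an app store or mini program platform, the store or platform may ask for various supporting documents, depending on its use cases. This guide explains, on the basis of legal compliance requirements, how developers can obtain those documents.

## 📌 Key points

### 1. Responsibility notice

#### ⚠️ Read before you act:

1. We recommend reading the original text of the Interim Measures for the Management of Generative Artificial Intelligence Services (《生成式人工智能服务管理暂行办法》) or consulting a legal expert.
2. Track policy changes proactively, verify the latest requirements regularly, and seek professional legal advice for your business scenario.
3. You bear sole and full legal responsibility for your application or mini program.

### 2. Platform statement

#### This document only provides:

1. A list of filing materials and how to obtain them
2. Guidance on looking up the filing information of the models you access
3. Nothing in it constitutes legal advice

#### Important legal position:

Even when you use this site's model services, you remain the statutory "service provider" and must fulfil obligations across the whole chain, including:

① content review ② user protection ③ data security ④ labeling standards

SiliconFlow does not guarantee that following this guide will get your mini program or app through review; the review rules are set by the respective reviewing party. SiliconFlow can only certify that you are using SiliconFlow's services.

## Scenarios that require filing:

### 1. Listing a WeChat Mini Program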

## How to obtain filing information

### Step 1: Look up the model filing number

#### Example filing information for models:

DeepSeek:

Lookup address: [https://beian.cac.gov.cn/#/searchResult](https://beian.cac.gov.cn/#/searchResult)

| Model name | Filing type | Filing number | Link |
| --------------------- | ------------------- | --------------------------------- | --------------------------------------------------------------------------------------------------------------------- |
| Deepseek Chat | Generative AI service filing | Beijing-DeepseekChat-202404280016 | [https://www.cac.gov.cn/2024-04/02/c\_1713729983803145.htm](https://www.cac.gov.cn/2024-04/02/c_1713729983803145.htm) |
| DeepSeek大语言模型算法 | Deep synthesis service algorithm filing (service technical supporter) | 网信算备110108970550101240011号 | [https://www.cac.gov.cn/2024-04/11/c\_1714509267496697.htm](https://www.cac.gov.cn/2024-04/11/c_1714509267496697.htm) |
| DeepSeekChat 求索对话生成算法 | Deep synthesis service algorithm filing (service provider) | 网信算备330105747635301240017号 | [https://www.cac.gov.cn/2024-06/12/c\_1719783421546747.htm](https://www.cac.gov.cn/2024-06/12/c_1719783421546747.htm) |

Tongyi Qianwen (通义千问):

| Model | Algorithm name | Filing entity role | Filing entity | Main use | Filing number |
| ---- | ------------- | ------- | ----------------- | -------------------------------------------------------------- | -------------------------- |
| 通义千问 | 达摩院交互式多能型合成算法 | Service technical supporter | 阿里巴巴达摩院(杭州)科技有限公司 | Used for open-domain multimodal content generation; serves enterprise customers in question answering and consulting, providing through an API the ability to generate multimodal information from user input. | 网信算备330110507206401230035号 |
| 通义万相 | 达摩院图像合成算法 | Service technical supporter | 阿里巴巴达摩院(杭州)科技有限公司 | Used in digital image processing, computer vision, virtual reality, artificial intelligence, and related fields, with broad application prospects in image generation, enhancement, segmentation, and recognition. | 网信算备330110507206401230027号 |
| 通义万相 | 通义万相视频生成算法 | Service technical supporter | 通义云启(杭州)信息技术有限公司 | Used for video generation; serves enterprise customers by generating videos that meet advertising and media requirements from user-supplied text or images. | 网信算备330106003156001240091号 |

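### Step 2: Obtain the cooperation agreement

#### How to obtain the cooperation agreement:

1. Make sure your account has completed enterprise real-name verification. Verification link: [https://cloud.siliconflow.cn/account/authentication](https://cloud.siliconflow.cn/account/authentication)

2. Add our WeCom (企业微信) customer service contact: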

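3. Fill in the form as directed by customer service; we will provide an e-signature version of the cooperation agreement within 5 working days.

#### Please note:

1. The entity name of your mini program or application must be the same as the entity that signs the cooperation agreement and the enterprise verified on your SiliconFlow account. Without SiliconFlow enterprise verification, the agreement cannot be provided.

2. Describing your mini program's use case and the models it uses accurately will help you pass review.

#### Main legal basis:

1. Interim Measures for the Management of Generative Artificial Intelligence Services (《生成式人工智能服务管理暂行办法》)

[https://www.moj.gov.cn/pub/sfbgw/flfggz/flfggzbmgz/202401/t20240109\_493171.html](https://www.moj.gov.cn/pub/sfbgw/flfggz/flfggzbmgz/202401/t20240109_493171.html)

2. Provisions on the Security Assessment of Internet Information Services with Public Opinion Attributes or Social Mobilization Capabilities (《具有舆论属性或社会动员能力的互联网信息服务安全评估规定》)

[https://www.gov.cn/zhengce/zhengceku/2018-11/30/content\_5457763.htm](https://www.gov.cn/zhengce/zhengceku/2018-11/30/content_5457763.htm)

3. Provisions on the Management of Algorithmic Recommendations in Internet Information Services (《互联网信息服务算法推荐管理规定》)

[https://www.gov.cn/zhengce/zhengceku/2022-01/04/content\_5666429.htm](https://www.gov.cn/zhengce/zhengceku/2022-01/04/content_5666429.htm)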

# Model issues

Source: https://docs.siliconflow.cn/cn/faqs/misc

## 1. Garbled model output

Some models tend to produce garbled output when sampling parameters are not set. If this happens, try setting `temperature`, `top_k`, `top_p`, and `frequency_penalty`.

Change the payload to the following form, adapting it as needed for other languages:

```python
payload = {
    "model": "Qwen/Qwen2.5-Math-72B-Instruct",
    "messages": [
        {
            "role": "user",
            "content": "1+1=?",
        }
    ],
    "max_tokens": 200,       # add as needed
    "temperature": 0.7,      # add as needed
    "top_k": 50,             # add as needed
    "top_p": 0.7,            # add as needed
    "frequency_penalty": 0   # add as needed
}
```

## 2. About `max_tokens`

Among the LLMs provided by the platform:

* Models with `max_tokens` limited to `16384`:
  * deepseek-ai/DeepSeek-R1
  * Pro/deepseek-ai/DeepSeek-R1
  * Pro/deepseek-ai/DeepSeek-R1-0120
  * Qwen/QVQ-72B-Preview
  * deepseek-ai/DeepSeek-R1-Distill-Qwen-32B
  * deepseek-ai/DeepSeek-R1-Distill-Qwen-14B
  * deepseek-ai/DeepSeek-R1-Distill-Qwen-7B
  * deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B
  * Pro/deepseek-ai/DeepSeek-R1-Distill-Qwen-7B
  * Pro/deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B
* Models with `max_tokens` limited to `8192`:
  * Qwen/QwQ-32B-Preview
* Models with `max_tokens` limited to `4096`:
  * All other LLMs not listed above

If you have special requirements, please submit feedback through the [SiliconFlow MaaS online request form](https://m09tqret04o.feishu.cn/share/base/form/shrcnaamvQ4C7YKMixVnS32k1G3).
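The limits listed above can be encoded as a small lookup so that a client clamps `max_tokens` before sending a request. This is a sketch only: the helper names `max_tokens_limit` and `clamp_max_tokens` are invented for illustration, and the model sets simply mirror the list in this section, which may change over time.

```python
# Model names copied from the max_tokens list in this section.
LIMIT_16384 = {
    "deepseek-ai/DeepSeek-R1",
    "Pro/deepseek-ai/DeepSeek-R1",
    "Pro/deepseek-ai/DeepSeek-R1-0120",
    "Qwen/QVQ-72B-Preview",
    "deepseek-ai/DeepSeek-R1-Distill-Qwen-32B",
    "deepseek-ai/DeepSeek-R1-Distill-Qwen-14B",
    "deepseek-ai/DeepSeek-R1-Distill-Qwen-7B",
    "deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B",
    "Pro/deepseek-ai/DeepSeek-R1-Distill-Qwen-7B",
    "Pro/deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B",
}
LIMIT_8192 = {"Qwen/QwQ-32B-Preview"}
DEFAULT_LIMIT = 4096  # every other LLM, per the list above


def max_tokens_limit(model: str) -> int:
    """Return the documented max_tokens cap for a model name."""
    if model in LIMIT_16384:
        return 16384
    if model in LIMIT_8192:
        return 8192
    return DEFAULT_LIMIT


def clamp_max_tokens(model: str, requested: int) -> int:
    """Lower the requested max_tokens to the model's cap if it exceeds it."""
    return min(requested, max_tokens_limit(model))
```

For example, `clamp_max_tokens("Qwen/QwQ-32B-Preview", 20000)` yields 8192, so the value placed in the payload stays within the documented limit.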

## 3. About `context_length`

`context_length` differs between LLMs. Search for the model in the [Model Plaza](https://cloud.siliconflow.cn/models) to see its details.

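## 4. What is the difference between Pro and non-Pro models?

1. For some models, the platform offers both a free version and a paid version. The free version keeps the original name; the paid version is prefixed with "Pro/" to tell them apart. Rate Limits are fixed for the free version and variable for the paid version; for the rules, see [Rate Limits](https://docs.siliconflow.cn/cn/userguide/rate-limits/rate-limit-and-upgradation).

2. For the `DeepSeek R1` and `DeepSeek V3` models, the platform names the versions according to the `payment method` they require. The `Pro version` can be paid for only with `topped-up balance`; the `non-Pro version` can be paid for with either `gifted balance` or `topped-up balance`.

## 5. Do voice models have duration or audio-quality requirements for user-defined voices?

* For cosyvoice2, an uploaded voice sample must be shorter than 30 s.

## 6. Truncated model output

Troubleshoot along the following lines:

* Truncated output when calling the API:
  * `max_tokens`: set `max_tokens` to a suitable value. Output longer than `max_tokens` is truncated. For the DeepSeek R1 series, `max_tokens` can be set to at most 16384.
  * Use streaming requests: with non-streaming requests, long outputs are prone to 504 timeouts.
  * Client timeout: increase the client timeout so that the request is not cut off by the client before the output completes.
* Truncated output when using a third-party client:
  * Cherry Studio defaults to a `max_tokens` of 4096. In the settings, turn on the "开启消息长度限制" (enable message length limit) switch and set `max_tokens` to a suitable value.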
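One way to tell a `max_tokens` cut-off from other causes is to inspect the response itself. The check below is a hedged sketch: it assumes the chat completions response follows the OpenAI-compatible shape, in which each choice carries a `finish_reason` that equals `"length"` when generation stopped at the token limit. That field is not described in this FAQ, so treat it as an assumption to verify against the API reference.

```python
def was_truncated(response: dict) -> bool:
    """Return True if any choice stopped because it hit the token limit.

    Assumes an OpenAI-compatible response body:
    {"choices": [{"finish_reason": "length" | "stop" | ...}, ...]}
    """
    choices = response.get("choices") or []
    return any(choice.get("finish_reason") == "length" for choice in choices)
```

If this returns `True`, raising `max_tokens` (within the model's limit) is the first thing to try; if it returns `False` and the text still ends abruptly, the client timeout or a 504 is the more likely cause.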

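## 7. Troubleshooting 429 errors when using models

Troubleshoot along the following lines:

* Regular users: check your user level and the Rate Limits for the model. If your requests exceed the Rate Limits, try again later.

* Dedicated-instance users: dedicated instances usually have no Rate Limits. If you get a 429 error, first confirm that you are calling the correct model name for the dedicated instance, then check that the `api_key` in use matches the dedicated instance.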
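"Try again later" is commonly implemented as exponential backoff with jitter. The sketch below shows the idea under stated assumptions: `send` is a hypothetical callable that performs one request and returns a `(status_code, body)` pair, and the delay values are illustrative defaults rather than platform recommendations.

```python
import random
import time
from typing import Callable, Tuple


def call_with_backoff(
    send: Callable[[], Tuple[int, str]],
    max_retries: int = 5,
    base_delay_s: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Tuple[int, str]:
    """Retry `send` while it returns HTTP 429, doubling the wait each time."""
    attempt = 0
    while True:
        status, body = send()
        if status != 429 or attempt >= max_retries:
            return status, body  # success, other error, or retries exhausted
        # Exponential backoff plus random jitter to avoid synchronized retries.
        delay = base_delay_s * (2 ** attempt) + random.uniform(0, base_delay_s)
        sleep(delay)
        attempt += 1
```

Only 429 is retried here; other status codes are returned to the caller immediately so that, for example, a 401 caused by a wrong key is not retried pointlessly.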

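## 8. The top-up succeeded but the account still reports insufficient balance

Troubleshoot along the following lines:

* Confirm that the `api_key` in use belongs to the account you just topped up.

* If the `api_key` is correct, the top-up may have been delayed by the network; wait a few minutes and try again.

## 9. Real-name verification is complete but some models are still inaccessible

Troubleshoot along the following lines:

* Confirm that the `api_key` in use belongs to the account that just completed real-name verification.

* If the `api_key` is correct, open the [Real-name verification](https://cloud.siliconflow.cn/account/authentication) page and check the verification status. If the status shows "认证中" (verification in progress), try cancelling and verifying again.

For other problems, please submit feedback through the [SiliconFlow MaaS online request form](https://m09tqret04o.feishu.cn/share/base/form/shrcnaamvQ4C7YKMixVnS32k1G3).

# Billing issues

Source: https://docs.siliconflow.cn/cn/faqs/misc_finance

## 1. How to top up

1. Log in to the [SiliconCloud](https://cloud.siliconflow.cn/) website on a computer.

2. Click "[Real-name verification](https://cloud.siliconflow.cn/account/authentication)" in the left sidebar and complete verification.

3. Click "[Top up balance](https://cloud.siliconflow.cn/expensebill)" in the left sidebar to top up.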

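## 2. Does gifted balance expire?

Gifted balance currently has no expiry date.

## 3. How to view usage bills

1. Log in to the [SiliconCloud](https://cloud.siliconflow.cn/) website on a computer.

2. Click "[Bills](https://cloud.siliconflow.cn/bills)" in the left sidebar to view them.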

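If you need an invoice, send an email as described in [Invoicing](https://docs.siliconflow.cn/faqs/invoice); we will issue the invoice based on your actual usage charges.

## 4. Billing rules for model fine-tuning

Model fine-tuning is billed separately for two scenarios: training and inference.

Where to see the prices: [Model fine-tuning](https://cloud.siliconflow.cn/fine-tune) > create a new fine-tuning job. After you select the "base model", the page shows the corresponding "fine-tuning training price" and "fine-tuning inference price".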

For other problems, please submit feedback through the [SiliconFlow MaaS online request form](https://m09tqret04o.feishu.cn/share/base/form/shrcnaamvQ4C7YKMixVnS32k1G3).

# Rate Limits issues

Source: https://docs.siliconflow.cn/cn/faqs/misc_rate

## 1. How to raise the Rate Limits of free models

* The Rate Limits of all free models are fixed.

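* For some models, the platform offers both a free version and a paid version. The free version keeps the original name; the paid version is prefixed with "Pro/" to tell them apart.

* Paid models unlock more generous Rate Limits as your monthly spending grows; you can also buy a [level package](https://cloud.siliconflow.cn/package) separately to raise Rate Limits quickly.

* For the `DeepSeek R1` and `DeepSeek V3` models, Rate Limits are fixed.

For other problems, please submit feedback through the [SiliconFlow MaaS online request form](https://m09tqret04o.feishu.cn/share/base/form/shrcnaamvQ4C7YKMixVnS32k1G3).

# Usage issues

Source: https://docs.siliconflow.cn/cn/faqs/misc_use

## 1. How to delete an account

Send your account ID and contact details to [contact@siliconflow.cn](mailto:contact@siliconflow.cn). After verification, the request will be processed within 15 working days.

## 2. How to invite others

1. Log in to the [SiliconCloud](https://cloud.siliconflow.cn/) website on a computer.

2. Click "[My invitations](https://cloud.siliconflow.cn/invitation)" in the left sidebar, then "Copy invitation link".

3. Share the invitation.

4. Details of successful invitations are shown on the "[My invitations](https://cloud.siliconflow.cn/invitation)" page.

Three invitation methods are available: QR code, invitation code, and invitation link.

## 3. Why can't my phone number be used to register or bind?

To protect users' account security and service experience, the platform restricts registration and binding for some phone number ranges that may pose security risks (such as certain virtual-operator ranges). The aim is to provide a safer, more stable service environment and to reduce the disruption that malicious behavior could cause to normal use.

We recommend registering or binding with a regular phone number from a common mainland China carrier (such as China Mobile, China Unicom, or China Telecom).

If you are using a regular phone number and still see the prompt, try a different number. If you still have questions, add the assistant on WeChat and we will help you promptly.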

For other problems, please submit feedback through the [SiliconFlow MaaS online request form](https://m09tqret04o.feishu.cn/share/base/form/shrcnaamvQ4C7YKMixVnS32k1G3).

# Streaming output

Source: https://docs.siliconflow.cn/cn/faqs/stream-mode

## 1. Streaming output in Python

### 1.1 Streaming with the OpenAI library

For general use, we recommend the OpenAI library for streaming output.

```python
from openai import OpenAI

client = OpenAI(
    base_url='https://api.siliconflow.cn/v1',
    api_key='your-api-key'
)

# Send a request with streaming enabled
response = client.chat.completions.create(
    model="deepseek-ai/DeepSeek-V2.5",
    messages=[
        {"role": "user", "content": "SiliconCloud has launched its public beta and gives every user 300 million tokens to unlock the innovative power of open-source large models. What changes does this bring to the large-model application field as a whole?"}
    ],
    stream=True  # enable streaming output
)

# Receive and process the response chunk by chunk
for chunk in response:
    if not chunk.choices:
        continue
    delta = chunk.choices[0].delta
    if delta.content:
        print(delta.content, end="", flush=True)
    # reasoning_content may be absent for non-reasoning models, so read it defensively
    reasoning = getattr(delta, "reasoning_content", None)
    if reasoning:
        print(reasoning, end="", flush=True)
```

### 1.2 Streaming with the requests library

If you are not using the OpenAI library, for example because you call the SiliconCloud API through the requests library, note the following:

Besides setting `stream` in the payload, you must also pass `stream=True` to the requests call itself; otherwise the response is not returned in streaming mode.

```python
import requests
import json

url = "https://api.siliconflow.cn/v1/chat/completions"

payload = {
    "model": "deepseek-ai/DeepSeek-V2.5",  # replace with your model
    "messages": [
        {
            "role": "user",
            "content": "SiliconCloud has launched its public beta and gives every user 300 million tokens to unlock the innovative power of open-source large models. What changes does this bring to the large-model application field as a whole?"
        }
    ],
    "stream": True  # streaming mode must be set here
}

headers = {
    "accept": "application/json",
    "content-type": "application/json",
    "authorization": "Bearer your-api-key"
}

# The requests call must also be told to stream
response = requests.post(url, json=payload, headers=headers, stream=True)

# Print the streamed output
if response.status_code == 200:
    full_content = ""
    full_reasoning_content = ""

    for chunk in response.iter_lines():
        if chunk:
            chunk_str = chunk.decode('utf-8').replace('data: ', '')
            if chunk_str != "[DONE]":
                chunk_data = json.loads(chunk_str)
                delta = chunk_data['choices'][0].get('delta', {})
                content = delta.get('content', '')
                reasoning_content = delta.get('reasoning_content', '')
                if content:
                    print(content, end="", flush=True)
                    full_content += content
                if reasoning_content:
                    print(reasoning_content, end="", flush=True)
                    full_reasoning_content += reasoning_content
else:
    print(f"Request failed, status code: {response.status_code}")
```
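The line handling inside the loop above can be pulled into two small functions, which makes the parsing easier to test without a network call. This is a minimal sketch, not part of the platform's SDK: `parse_sse_line` and `delta_text` are hypothetical helper names, and the chunk shape (`choices[0].delta.content` / `reasoning_content`) is taken from the example above.

```python
import json
from typing import Optional, Tuple


def parse_sse_line(raw: bytes) -> Optional[dict]:
    """Decode one line from iter_lines(); return None for non-data lines and [DONE]."""
    line = raw.decode("utf-8").strip()
    if not line.startswith("data:"):
        return None  # blank keep-alive lines, comments, other SSE fields
    data = line[len("data:"):].strip()
    if data == "[DONE]":
        return None  # end-of-stream marker
    return json.loads(data)


def delta_text(chunk: dict) -> Tuple[str, str]:
    """Return (content, reasoning_content) from a parsed chunk, empty if absent."""
    choices = chunk.get("choices") or []
    if not choices:
        return "", ""
    delta = choices[0].get("delta") or {}
    return delta.get("content") or "", delta.get("reasoning_content") or ""
```

Stripping only a leading `data:` prefix, rather than replacing the substring everywhere, avoids altering payloads whose text happens to contain `data: `.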

## 2. Streaming output with curl

By default, curl buffers its output stream, so even when the server sends data in chunks, nothing appears until the buffer fills or the connection closes. Passing `-N` (or `--no-buffer`) disables this buffering so that each chunk is printed to the terminal immediately, which gives streaming output.

```bash
curl -N -s \
  --request POST \
  --url https://api.siliconflow.cn/v1/chat/completions \
  --header 'Authorization: Bearer token' \
  --header 'Content-Type: application/json' \
  --data '{
    "model": "Qwen/Qwen2.5-72B-Instruct",
    "messages": [
      {"role":"user","content":"Is there a Nobel Prize in mathematics?"}
    ],
    "stream": true
  }'
```

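# Account entity change agreement

Source: https://docs.siliconflow.cn/cn/legals/agreement-for-account-ownership-transfer

Updated: February 12, 2025

Before you formally submit an application to change the account entity, please read this agreement carefully. **This agreement uses bold or underlined text to draw your attention to clauses that materially affect your rights and obligations.** If you have questions about any clause, contact [contact@siliconflow.cn](mailto:contact@siliconflow.cn). If you do not agree with this agreement, or cannot accurately understand the interpretation of its clauses by the SiliconCloud platform ("the platform"), do not proceed.

When you choose to accept this agreement, whether by confirming directly on a web page, by accepting a reference to this page's link with a prompt to comply with its content, by signing a written agreement, by another method recognized by the platform, or by any other means recognized by laws, regulations, or custom, you are deemed to have reached an agreement with the platform and to accept all of its terms. This agreement is legally binding on you from the effective date stipulated in it.

**Proceed only after you have accepted this agreement, are sure that the account entity change will achieve your intended purpose, and are able to accept the consequences and responsibilities arising from the change.**

## 1. Definitions and interpretation

1.1 "The platform's official website" means the website with the domain https://siliconflow.cn.

1.2 "Platform account" means the numeric ID that the platform assigns to a registered user, hereinafter "platform account" or "account".

1.3 "Platform account holder" means the user who registers, holds, and uses a platform account.

For an account that has completed real-name verification, unless there is evidence to the contrary, the platform determines the account holder from the user's real-name verification information; if the information the user filled in differs from the verified entity's information, the real-name verification information prevails. For an account that has not completed real-name verification, the platform reasonably determines the account holder from the information the user filled in, together with other relevant factors.

1.4 "Change of the account's real-name verified entity" means that the real-name verified entity of a platform account (the original entity) is changed to another real-name verified entity (the new entity); in this agreement it is referred to as "account entity change".

1.5 The procedure and consequences of "account entity change" under this agreement apply only where the change is initiated at the request of the account's original entity and accepted by the account's new entity.

## 2. Parties, content, and effectiveness

**2.1** This agreement sets out the terms agreed between the holder of a specific platform account ("you", the "original entity") and the platform regarding your application to transfer to a third party the rights and obligations under the SiliconCloud Platform Product User Agreement (《SiliconCloud平台产品用户协议》) previously concluded between the two parties for the account concerned, and related matters.

**2.2 This agreement is subject to conditions of effectiveness; it takes legal effect on you and the platform only when all four of the following conditions are met:**

2.2.1 The platform account you are applying to change has completed real-name verification, and you are the verified entity;

2.2.2 The platform has reviewed and approved your account entity change application;

2.2.3 You apply to transfer the rights and obligations under the account to a third party (the "new entity"), and the new entity agrees to continue to perform the corresponding rights and obligations as stipulated in the SiliconCloud Platform Product User Agreement;

2.2.4 The new entity must be an enterprise and cannot be a natural person.

**2.3 Matters between you and the new entity concerning the transfer of all products, services, funds, claims, debts, and so on under the account (collectively, "account resources") are to be agreed separately between you and the new entity. If that agreement conflicts with this agreement, this agreement prevails.**

## 3. Conditions and procedure for the change

**3.1** The platform accepts account entity change applications only under the following conditions:

3.1.1 The account entity needs to be changed because the original entity has undergone merger, division, reorganization, dissolution, death, or a similar event;

3.1.2 The account entity needs to be changed under an effective legal instrument such as a judgment, ruling, award, or decision;

3.1.3 The actual holder of the account differs from the account's real-name verified entity, and clear proof has been provided;

3.1.4 Laws and regulations require the account entity to be changed;

3.1.5 Other situations in which the platform, after prudent assessment, considers that the account entity may be changed.

**3.2** When you initiate an account entity change, you must follow these procedural requirements:

3.2.1 You must initiate the application from the platform account whose entity is to be changed;

3.2.2 The platform may perform secondary verification by phone number, face recognition, or similar means, require you to provide proof of authorization (when you initiate the application through an account administrator), and request other materials it considers necessary, in order to confirm that the application reflects your own intention;

3.2.3 You must agree to this agreement and accept that it is legally binding on you;

3.2.4 You must comply with the platform's other rules and policies relating to account entity changes.

**3.3 You understand and agree that:**

**3.3.1 Before the new entity confirms acceptance and completes real-name verification, you may withdraw or cancel this account entity change process;**

**3.3.2 After the new entity confirms acceptance and completes real-name verification, the platform will not support your request to withdraw or cancel;**

**3.3.3 You are obliged to cooperate with the new entity in handing over control of the account;**

**3.3.4 While the real-name verified entity is being changed, all logins and operations under the account are deemed to be your actions, and you must take care of and bear the operational risks of the account.**

**3.4 You understand and agree that if any of the following situations is found, the platform may terminate the account entity change procedure at any time or take corresponding measures:**

**3.4.1 A third party has filed a complaint against the account that has not yet been resolved;**

**3.4.2 The account is under investigation by a competent national authority;**

**3.4.3 The account is involved in litigation, arbitration, or other legal proceedings;**

**3.4.4 The account has cooperative relationships with the platform that are tied to the original entity's identity, such as credit-control or partner relationships;**

**3.4.5 Other situations that may harm national or public interests, or the rights of the platform or other third parties;**

**3.4.6 The account is subject to account disputes or unclear ownership caused by frequent changes.**

## 4. Effects of the account entity change

**4.1** Once your account entity change application is approved by the platform and the new entity has confirmed it and completed real-name verification, the account entity change is complete. Whether the change has succeeded is determined by the platform's system records. A successful change has the following consequences for you:

**4.1.1 Your rights and interests under the account are transferred to the new verified entity, including but not limited to control of the account, services already activated under the account, and unconsumed topped-up funds under the account;**

4.1.2 Ownership of the account and all resources under it passes entirely to the new entity. **However, the platform may terminate cooperation agreements that the original entity separately concluded with the platform through the account concerning preferential policies, credit control, partner cooperation, and similar matters, as well as any association between the account and other platform accounts;**

**4.1.3 The platform does not accept requests, made on the grounds of an agreement between you and the new entity or on any other grounds, to transfer one or more services or rights under the account to another account you designate;**

**4.1.4 The platform may refuse requests, made on the grounds of a dispute between you and the new entity or on any other grounds, to revoke the account entity change;**

**4.1.5 If a dispute over control of the account arises between you and the new entity, the platform may take measures to give the new entity actual control of the account.**

**4.2 You understand and confirm that the account entity change does not mean that, from the time of the change, you are exempted or relieved from any actions and responsibilities under the account:**

**4.2.1 You remain responsible for all actions taken under the account before the change;**

**4.2.2 For contracts and other matters that arose before the change and continue to be performed after it, you bear joint and several liability for the new entity's performance after the change and its consequences.**

## 5. Rights and obligations of both parties

**5.1** You undertake and warrant that:

5.1.1 The content you fill in and the materials you submit during the account entity change process are true, accurate, and valid, and you have not done anything that misleads or may mislead the platform into accepting the application;

5.1.2 You are not using the platform's account entity change service to violate any laws, regulations, departmental rules, or national policies, or to infringe the rights of any third party;

**5.1.3 Your account entity change will not put the platform in breach of contract or of the law. The platform bears no liability, beyond what the law expressly provides, for any dispute, controversy, loss, infringement, or breach of contract arising from the account entity change.**

**You further undertake that if the platform suffers losses for the above reasons, you will compensate the platform accordingly.**

**5.2** You understand and agree that:

5.2.1 At any time after you initiate the application, the platform may require written materials or other proof that you are entitled to change the account entity;

5.2.2 The platform may determine, at its own prudent discretion, whether your application complies with laws, regulations, policies, and the account agreement; where there is a violation or another circumstance that makes the change inappropriate, the platform may refuse;

5.2.3 The platform may record the account entity, transaction records, contracts, and other related information from before and after the change in order to comply with laws and regulations and to protect its own lawful rights and interests;

5.2.4 If you violate clause 5.1 of this agreement, the platform may, upon discovery, terminate the account entity change process directly, or revoke a completed change and restore the account entity to its state before the change.

## 6. Supplementary provisions

**6.1** You understand and accept that the conclusion, performance, and interpretation of this agreement, and the resolution of disputes, are governed by the laws of the People's Republic of China; any clause that is inconsistent or in conflict with the law is not legally binding.

**6.2** If any dispute arises over the content or performance of this agreement, the parties shall negotiate amicably; if negotiation fails, either party may bring a lawsuit before the competent people's court at the defendant's place of domicile.

**6.3** If this agreement is inconsistent or in conflict with terms previously signed by the parties or with statements made by the platform, this agreement prevails.

**You hereby confirm again that you have fully read and understood the above Agreement on Applying for Account Entity Change (《申请账号主体变更协议》), and that you voluntarily enter the subsequent account entity change process and accept the above terms.**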

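# Privacy policy

Source: https://docs.siliconflow.cn/cn/legals/privacy-policy

Updated: February 27, 2025

Welcome to SiliconCloud, a cost-effective open GenAI platform. Beijing SiliconFlow Technology Co., Ltd. (北京硅基流动科技有限公司) and its affiliates (**"SiliconFlow" or "we"**) attach great importance to protecting the information of users (**"you"**). When you register for, log in to, or use [https://siliconflow.cn](https://siliconflow.cn) (**"the platform"**), we collect and store the **user information** (defined in 1.1) needed for registration and normal use of the platform's functions. We do not collect or store the **interaction data** (defined in 1.4.1) exchanged between you and open-source models, third-party websites, software, applications, or services while you use the platform. The core differences between "user information" and "interaction data" are compared in footnote 1.

Our services may contain links to third-party websites, applications, or services; this policy does not apply to any product, service, website, or content provided by third parties. If you (or your company or other business entity) use our products or services to serve your own customers, you need to draw up your own privacy policy that fits the transaction scenario and the relevant laws. If you (or your company or other business entity) agree to use our products or services to access links to third-party websites, applications, or services, you must comply with the relevant policies of those third parties yourself.

This privacy policy explains how we handle your user information and interaction data. Before you start using our services, please read and understand this policy carefully, paying particular attention to the clauses marked in bold, and make sure you fully understand and agree to them. If you do not agree to this privacy policy, you should stop using the services immediately. By choosing to use the services, you are deemed to accept and approve of our handling of your information in accordance with this privacy policy.

# Overview

This privacy policy will help you understand:

1. How we collect and use your user information

2. Use of cookies and similar technologies

3. How we store your user information

4. How we share, transfer, and publicly disclose your information

5. How we protect the security of your information

6. How we manage your user information

7. Terms for use by minors

8. Revision of and notification about the privacy policy

9. Scope of application

## 1. How we collect and use your user information

### 1.1 User information we actively collect

To ensure that you can use our platform normally, and where the law permits, we collect, in the scenarios and business activities below, the information you actively provide when using the services, as well as information generated during your use of the services that we collect by automated means, including but not limited to the personal information you provide (**"user information"**). **Please note in particular that information which cannot identify you, alone or in combination with other information, and which is unrelated to you is not your personal information in the legal sense.** When your information can identify you, alone or in combination with other information, or is related to you, or when we combine data that cannot be linked to any specific user with your other user information, then for the duration of the combined use this information is handled and protected as your user information in accordance with this privacy policy. **For clarity, personal information does not include information that has been anonymized.**

**1.1.1 When you register, verify, or log in to a platform account**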

If you run into other problems, please report them through the [SiliconFlow MaaS online request form](https://m09tqret04o.feishu.cn/share/base/form/shrcnaamvQ4C7YKMixVnS32k1G3).

# Streaming output

Source: https://docs.siliconflow.cn/cn/faqs/stream-mode

## 1. Streaming output in Python

### 1.1 Streaming with the OpenAI library

In most scenarios, we recommend using the OpenAI library for streaming output.

```python
from openai import OpenAI

client = OpenAI(
    base_url='https://api.siliconflow.cn/v1',
    api_key='your-api-key'
)

# Send a request with streaming enabled
response = client.chat.completions.create(
    model="deepseek-ai/DeepSeek-V2.5",
    messages=[
        {"role": "user", "content": "SiliconCloud has launched its public beta and gives every user 300 million tokens to unlock open-source LLM innovation. How will this change the LLM application landscape?"}
    ],
    stream=True  # enable streaming output
)

# Receive and handle the response chunk by chunk
for chunk in response:
    if not chunk.choices:
        continue
    delta = chunk.choices[0].delta
    if delta.content:
        print(delta.content, end="", flush=True)
    # reasoning_content may be absent for some models, so read it defensively
    reasoning = getattr(delta, "reasoning_content", None)
    if reasoning:
        print(reasoning, end="", flush=True)
```
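If you also need the complete text after the stream ends, the loop above can be wrapped in a small accumulator. The following is a minimal sketch, not part of the SiliconCloud or OpenAI API: `collect_stream` and `fake_chunk` are hypothetical helper names, and the fake chunks only mimic the `choices[0].delta` shape used above so the logic can be tried offline.

```python
from types import SimpleNamespace
from typing import Iterable, Tuple


def collect_stream(chunks: Iterable) -> Tuple[str, str]:
    """Accumulate (reasoning, answer) text from OpenAI-style stream chunks."""
    reasoning_parts, answer_parts = [], []
    for chunk in chunks:
        if not chunk.choices:  # skip chunks that carry no choices
            continue
        delta = chunk.choices[0].delta
        # Either field may be missing or None on a given chunk.
        reasoning = getattr(delta, "reasoning_content", None)
        content = getattr(delta, "content", None)
        if reasoning:
            reasoning_parts.append(reasoning)
        if content:
            answer_parts.append(content)
    return "".join(reasoning_parts), "".join(answer_parts)


def fake_chunk(**delta_fields):
    """Build a stand-in chunk for offline experiments (hypothetical helper)."""
    delta = SimpleNamespace(**delta_fields)
    return SimpleNamespace(choices=[SimpleNamespace(delta=delta)])
```

In real use you would pass the `response` object returned by `client.chat.completions.create(..., stream=True)` to `collect_stream` instead of fake chunks.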

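### 1.2 Streaming with the requests library

If you are not using the OpenAI library, for example when you call the SiliconCloud API through the requests library, note the following:

In addition to setting `stream` in the payload, you must also pass `stream=True` to the requests call itself. Otherwise the response is not returned in streaming mode.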

```python
import json

import requests

url = "https://api.siliconflow.cn/v1/chat/completions"

payload = {
    "model": "deepseek-ai/DeepSeek-V2.5",  # replace with your model
    "messages": [
        {
            "role": "user",
            "content": "SiliconCloud has launched its public beta and gives every user 300 million tokens to unlock open-source LLM innovation. How will this change the LLM application landscape?"
        }
    ],
    "stream": True  # enable streaming in the request body
}

headers = {
    "accept": "application/json",
    "content-type": "application/json",
    "authorization": "Bearer your-api-key"
}

# stream=True must also be passed to requests itself
response = requests.post(url, json=payload, headers=headers, stream=True)

# Print the streamed response
if response.status_code == 200:
    full_content = ""
    full_reasoning_content = ""

    for chunk in response.iter_lines():
        if not chunk:
            continue
        line = chunk.decode('utf-8')
        if not line.startswith('data:'):
            continue  # skip anything that is not a data line
        chunk_str = line[len('data:'):].strip()
        if chunk_str == "[DONE]":
            break
        chunk_data = json.loads(chunk_str)
        if not chunk_data.get('choices'):
            continue  # skip chunks that carry no choices
        delta = chunk_data['choices'][0].get('delta', {})
        content = delta.get('content', '')
        reasoning_content = delta.get('reasoning_content', '')
        if content:
            print(content, end="", flush=True)
            full_content += content
        if reasoning_content:
            print(reasoning_content, end="", flush=True)
            full_reasoning_content += reasoning_content
else:
    print(f"Request failed, status code: {response.status_code}")
```
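The per-line handling in the loop above can be isolated into a pure function, which makes it easier to test without a network call. This is a sketch under assumptions: `parse_sse_line` is a hypothetical helper name, and the line format (`data: <json>` terminated by `data: [DONE]`) is inferred from the example above rather than from a formal specification of the API.

```python
import json
from typing import Optional, Tuple


def parse_sse_line(raw: bytes) -> Optional[Tuple[str, str]]:
    """Return (reasoning_content, content) for one streamed line.

    Returns None when the line carries no delta: blank lines, lines that
    are not data lines, the [DONE] marker, or chunks without choices.
    """
    line = raw.decode("utf-8").strip()
    if not line.startswith("data:"):
        return None
    data = line[len("data:"):].strip()
    if data == "[DONE]":
        return None
    payload = json.loads(data)
    choices = payload.get("choices") or []
    if not choices:
        return None
    delta = choices[0].get("delta") or {}
    # Fields may be missing or null; normalise both cases to "".
    return delta.get("reasoning_content") or "", delta.get("content") or ""
```

With this helper, the body of the `for chunk in response.iter_lines():` loop reduces to calling `parse_sse_line(chunk)` and appending the two returned strings when the result is not `None`.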

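## 2. Streaming output with curl

By default, curl buffers its output stream, so even when the server sends data in chunks, you only see the content once the buffer fills up or the connection closes. Passing the `-N` (or `--no-buffer`) option disables this buffering so that each chunk is printed to the terminal as soon as it arrives, which gives you streaming output.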

```bash
curl -N -s \
  --request POST \
  --url https://api.siliconflow.cn/v1/chat/completions \
  --header 'Authorization: Bearer token' \
  --header 'Content-Type: application/json' \
  --data '{
    "model": "Qwen/Qwen2.5-72B-Instruct",
    "messages": [
      {"role":"user","content":"Is there a Nobel Prize in mathematics?"}
    ],
    "stream": true
  }'
```

# 主体变更协议

Source: https://docs.siliconflow.cn/cn/legals/agreement-for-account-ownership-transfer

更新日期:2025年2月12日

在您正式提交账号主体变更申请前,请您务必认真阅读本协议。**本协议将通过加粗或加下划线的形式提示您特别关注对您的权利及义务将产生重要影响的相应条款。** 如果您对本协议的条款有疑问的,请向[contact@siliconflow.cn](mailto:contact@siliconflow.cn)咨询,如果您不同意本协议的内容,或者无法准确理解Siliconcloud平台(本平台)对条款的解释,请不要进行后续操作。

当您通过网络页面直接确认、接受引用本页面链接及提示遵守内容、签署书面协议、以及本平台认可的其他方式,或以其他法律法规或惯例认可的方式选择接受本协议,即表示您与本平台已达成协议,并同意接受本协议的全部约定内容。自本协议约定的生效之日起,本协议对您具有法律约束力。

**请您务必在接受本协议,且确信通过账号主体变更的操作,能够实现您所希望的目的,且您能够接受因本次变更行为的相关后果与责任后,再进行后续操作。**

## 一、定义和解释

1.1 "Platform website": the website whose domain is [https://siliconflow.cn](https://siliconflow.cn).

1.2 “本平台账号”:是指本平台分配给注册用户的数字ID,以下简称为“本平台账号”、“账号”。

1.3 “本平台账号持有人”,是指注册、持有并使用本平台账号的用户。

已完成实名认证的账号,除有相反证据外,本平台将根据用户的实名认证信息来确定账号持有人,如用户填写信息与实名认证主体信息不同的,以实名认证信息为准;未完成实名认证的账号,本平台将根据用户的填写信息,结合其他相关因素合理判断账号持有人。

1.4 “账号实名认证主体变更”:是指某一本平台账号的实名认证主体(原主体),变更为另一实名认证主体(新主体),本协议中简称为“账号主体变更”。

1.5 本协议下的“账号主体变更”程序、后果,仅适用于依据账号原主体申请发起、且被账号新主体接受的本平台账号实名认证主体变更情形。

## 二. 协议主体、内容与生效

**2.1** 本协议是特定本平台账号的账号持有人(“您”、“原主体”)与本平台之间,就您申请将双方之前就本次申请主体变更的本平台账号所达成的《SiliconCloud平台产品用户协议》的权利义务转让给第三方,及相关事宜所达成的一致条款。

**2.2 本协议为附生效条件的协议,仅在以下四个条件同时满足的情况下,** 才对您及本平台产生法律效力:

2.2.1 您所申请变更的本平台账号已完成了实名认证,且您为该实名认证主体;

2.2.2 本平台审核且同意您的账号主体变更申请;

2.2.3 您申请将账号下权利义务转让给第三方(“新主体”),且其同意按照《SiliconCloud平台产品用户协议》的约定继续履行相应的权利及义务;

2.2.4 新主体仅为企业主体,不能为自然人主体。

**2.3 您与新主体就账号下所有的产品、服务、资金、债权、债务等(统称为“账号下资源”)转让等相关事项,由您与新主体之间另外自行约定。但如果您与新主体之间的约定如与本协议约定冲突的,应优先适用本协议的约定。**

## 三. 变更的条件及程序

**3.1** 本平台仅接受符合以下条件下的账号主体变更申请;

3.1.1 由于原账号主体发生合并、分立、重组、解散、死亡等原因,需要进行账号主体变更的;

3.1.2 根据生效判决、裁定、裁决、决定等生效法律文书,需要账号主体变更的;

3.1.3 账号实际持有人与账号实名认证主体不一致,且提供了明确证明的;

3.1.4 根据法律法规规定,应当进行账号主体变更的;

3.1.5 本平台经过审慎判断,认为可以进行账号主体变更的其他情形。

**3.2** 您发起账号主体变更,应遵循如下程序要求:

3.2.1 您应在申请变更的本平台账号下发起账号主体变更申请;

3.2.2 本平台有权通过手机号、人脸识别等进行二次验证、要求您出具授权证明(当您通过账号管理人发起变更申请时)、以及其他本平台认为有必要的材料,确认本次申请账号主体变更的行为确系您本人意愿;

3.2.3 您应同意本协议的约定,接受本协议对您具有法律约束力;

3.2.4 您应遵守与账号主体变更相关的其他本平台规则、制度等的要求。

**3.3 您理解并同意,**

**3.3.1 在新主体确认接受且完成实名认证前,您可以撤回、取消本账号主体变更流程;**

**3.3.2 当新主体确认接受且完成实名认证后,您的撤销或取消请求本平台将不予支持;**

**3.3.3 且您有义务配合新主体完成账号管理权的转交。**

**3.3.4 在您进行实名认证主体变更期间,本账号下的登录和操作行为均视为您的行为,您应注意和承担账号的操作风险。**

**3.4 您理解并同意,如果发现以下任一情形的,本平台有权随时终止账号主体变更程序或采取相应处理措施:**

**3.4.1 第三方对该账号发起投诉,且尚未处理完毕的;**

**3.4.2 该账号正处于国家主管部门的调查中;**

**3.4.3 该账号正处于诉讼、仲裁或其他法律程序中;**

**3.4.4 该账号下存在与本平台的信控关系、伙伴关系等与原主体身份关联的合作关系的;**

**3.4.5 存在其他可能损害国家、社会利益,或者损害本平台或其他第三方权利的情形的;**

**3.4.6 该账号因存在频繁变更引起的账号纠纷或账号归属不明确的情形。**

## 四. 账号主体变更的影响

**4.1** 当您的账号主体变更申请经本平台同意,且新主体确认并完成实名认证后,该账号主体将完成变更,变更成功以本平台系统记录为准,变更成功后会对您产生如下后果:

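**4.1.1 The rights and interests under this account are transferred to the new real-name entity, including but not limited to control of the account, the services already activated under the account, and any unused recharge balance in the account;**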

4.1.2 该账号及该账号下的全部资源的归属权全部转由新主体拥有。**但本平台有权终止,原主体通过该账号与本平台另行达成的关于优惠政策、信控、伙伴合作等相关事项的合作协议,或与其他本平台账号之间存在的关联关系等;**

**4.1.3 本平台不接受您以和新主体之间的协议为由或以其他理由,要求将该账号下一项或多项业务、权益转移给您指定的其他账号的要求;**

**4.1.4 本平台有权拒绝您以和新主体之间存在纠纷为由或以其他理由,要求撤销该账号主体变更的请求;**

**4.1.5 本平台有权在您与新主体之间就账号管理权发生争议或纠纷时,采取相应措施使得新主体获得该账号的实际管理权。**

**4.2 您理解并确认,账号主体变更并不代表您自变更之时起已对该账号下的所有行为和责任得到豁免或减轻:**

**4.2.1 您仍应对账号主体变更前,该账号下发生的所有行为承担责任;**

**4.2.2 您还需要对于变更之前已经产生,变更之后继续履行的合同及其他事项,对新主体在变更之后的履行行为及后果承担连带责任。**

## 五. 双方权利与义务

**5.1** 您应承诺并保证,

5.1.1 您在账号主体变更流程中所填写的内容及提交的资料均真实、准确、有效,且不存在任何误导或可能误导本平台同意接受该项账号主体变更申请的行为;

5.1.2 您不存在利用本平台的账号主体变更服务进行任何违反法律、法规、部门规章和国家政策等,或侵害任何第三方权利的行为;

**5.1.3 您进行账号主体变更的操作不会置本平台于违约或者违法的境地。因该账号主体变更行为而产生的任何纠纷、争议、损失、侵权、违约责任等,本平台不承担法律明确规定外的责任。**

**您进一步承诺,如上述原因给本平台造成损失的,您应向本平台承担相应赔偿责任。**

**5.2** 您理解并同意,

5.2.1 本平台有权在您发起申请后的任一时刻,要求您提供书面材料或其他证明,证明您有权进行变更账号主体的操作;

5.2.2 本平台有权依据自己谨慎的判断来确定您的申请是否符合法律法规或政策的规定及账号协议的约定,如存在违法违规或其他不适宜变更的情形的,本平台有权拒绝;

5.2.3 本平台有权记录账号实名认证主体变更前后的账号主体、交易流水、合同等相关信息,以遵守法律法规的规定,以及维护自身的合法权益;

5.2.4 如果您存在违反本协议第5.1条的行为的,本平台一经发现,有权直接终止账号主体变更流程,或者撤销已完成的账号主体变更操作,将账号主体恢复为没有进行变更前的状态。

## 六. 附则

**6.1** 您理解并接受,本协议的订立、执行和解释及争议的解决均应适用中华人民共和国法律,与法律规定不一致或存在冲突的,该不一致或冲突条款不具有法律约束力。

**6.2** 就本协议内容或其执行发生任何争议,双方应进行友好协商;协商不成时,任一方均可向被告方所在地有管辖权的人民法院提起诉讼。

**6.3** 本协议如果与双方以前签署的有关条款或者本平台的有关陈述不一致或者相抵触的,以本协议约定为准。

**您在此再次保证已经完全阅读并理解了上述《申请账号主体变更协议》,并自愿正式进入账号主体变更的后续流程,接受上述条款的约束。**

# 隐私政策

Source: https://docs.siliconflow.cn/cn/legals/privacy-policy

更新日期:2025年02月27日

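Welcome to SiliconCloud, a cost-effective open GenAI platform. Beijing SiliconFlow Technology Co., Ltd. and its affiliates (**"SiliconFlow" or "we"**) attach great importance to protecting the information of users (**"you"**). When you register for, log in to, or use [https://siliconflow.cn](https://siliconflow.cn) (**the "Platform"**), we collect and retain the **user information** (defined in 1.1) needed for registration and normal use of the Platform's features. We do not collect or retain the **interaction data** (defined in 1.4.1) exchanged between you and open-source models, third-party websites, software, applications, or services while you use the Platform. See footnote 1 for a comparison of the core differences between "user information" and "interaction data".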

如我们的服务可能包含指向第三方网站、应用程序或服务的链接,本政策不适用于第三方提供的任何产品、服务、网站或内容。如您(或您的公司等经营实体)借助我们的产品或服务为您(或您的公司等经营实体)的客户提供服务,您(或您的公司等经营实体)需要自行制定符合交易场景及相关法律的隐私政策。如您(或您的公司等经营实体)同意借用我们的产品或服务访问第三方网站、应用程序或服务的链接,您(或您的公司等经营实体)需要自行遵守第三方网站、应用程序或服务的相关政策。

我们希望通过本隐私政策向您介绍我们对您的用户信息和交互数据的处理方式。在您开始使用我们的服务前,请您务必先仔细阅读和理解本政策,特别应重点阅读我们以粗体标识的条款,确保您充分理解和同意后再开始使用。如果您不同意本隐私政策,您应当立即停止使用服务。当您选择使用服务时,将视为您接受和认可我们按照本隐私政策对您的相关信息进行处理。

# 概述

本隐私政策将帮助您了解:

1. 我们如何收集和使用您的用户信息

2. 对Cookie和同类技术的使用

3. 我们如何存储您的用户信息

4. 我们如何共享、转让、公开披露您的信息

5. 我们如何保护您的信息安全

6. 我们如何管理您的用户信息

7. 未成年人使用条款

8. 隐私政策的修订和通知

9. 适用范围

## 1. 我们如何收集和使用您的用户信息

### 1.1 我们主动收集您的用户信息

为了保证您正常使用我们的平台,且在法律允许的情况下,我们会在如下场景和业务活动中收集您在使用服务时主动提供的信息,以及我们通过自动化手段收集您在使用服务过程中产生的信息,包括但不限于您提供的个人信息(**“用户信息”**)。**特别提示您注意,如信息无法单独或结合其他信息识别到您的个人身份且与您无关,其不属于法律意义上您的个人信息**;当您的信息可以单独或结合其他信息识别到您的个人身份或与您有关时,或我们将无法与任何特定用户建立联系的数据与其他您的用户信息结合使用时,则在结合使用期间,这些信息将作为您的用户信息按照本隐私政策处理与保护。**需要澄清的是,个人信息不包括经匿名化处理后的信息。**

**1.1.1 您注册、认证、登录本平台账号时**

当您在本平台注册账号时,您可以通过手机号创建账号。我们将通过发送短信验证码来验证您的身份是否有效,收集这些信息是为了帮助您完成注册和登录。 如果您使用其他平台的账号登录本平台,您授权我们获得您其他平台账号的相关信息,例如微信等第三方平台的账户/账号信息(包括但不限于名称、头像以及您授权的其他信息)。

**1.1.2 您订购及开通使用服务时**

在您订购或开通使用我们提供的任一项服务前,根据中华人民共和国大陆地区(不含中国香港、澳门、台湾地区)相关法律法规的规定,我们需要对您进行实名认证。

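(1) If you are an individual user, you may need to provide your real identity information to complete real-name verification, including your real name, ID document number, photos of the front and back of your ID document, the card number of a Class I bank account at a China UnionPay member institution or a credit card number, and the mobile number registered with the bank.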

(2) 如果您是单位用户,您可能需要提供您的相关信息,包括您的主体名称、统一社会信用代码、就注册账号所出具的授权委托书(加盖单位公章)、法人或组织的登记/资格证明(证件类型包括:企业营业执照、组织机构代码证、事业单位法人证书、社会团体法人登记证书、行政执法主体资格证等)、开户行、开户行账号(中国银联成员机构I类银行账户或信用卡号)、法定代表人或被授权人姓名、身份证件号码、身份证件正反面照片、法定代表人证件照片等信息以完成实名认证。另外,您可能需要提供企业联系人的个人信息,包括姓名、手机号码、电子邮箱。我们可能通过这些信息验证您的用户身份,并向您推广、介绍服务,发送业务通知、开具发票或与您进行业务沟通等。如您提供的上述信息包含第三方的个人信息或用户信息,您承诺并保证您向我们提供这些信息前已经获得了相关权利人的授权许可。

(3) 上述实名认证过程中,如果您通过人脸识别来进行实名认证的,您还需要提供面部特征的生物识别信息,并授权我们通过国家权威可信身份认证机构进行信息核验。

**1.1.3 您使用服务时**

(1) 我们致力于为您提供安全、可信的产品与使用环境,提供优质而可靠的服务与信息是我们的核心目标。为了维护我们服务的正常运行,保护您或其他用户或公众的合法利益免受损失,我们会收集用于维护产品或服务安全稳定运行的必要信息。

(2) 当您浏览或使用本平台时,为了保障网站和服务的正常运行及运营安全,预防网络攻击、侵入风险,更准确地识别违反法律法规或本平台的相关协议、服务规则的情况,我们会收集您的分辨率、时区和语言等设备信息、网络接入方式及类型信息、网页浏览记录。这些信息是我们提供服务和保障服务正常运行和网络安全所必须收集的基本信息。

(3) 为让您体验到更好的服务,并保障您的使用安全,我们可能记录网络日志信息,以及使用本平台及相关服务的频率、崩溃数据、使用情况及相关性能数据信息。

(4) 当您参与本平台调研、抽奖活动时,本平台可能会留存您的账号ID、姓名、地址、手机号、职务身份、产品和服务使用情况等信息,以后续与您取得联系,核实身份信息,并按照有关活动规则为您提供奖励(如有),具体调研、抽奖活动规则与本协议不一致的,以活动规则为准。

(5) 我们可能收集您使用本平台及相关服务而产生的用户咨询记录、报障记录和针对用户故障的排障过程(如通信或通话记录),我们将通过记录、分析这些信息以便更及时响应您的帮助请求,以及用于改进服务。

(6) 合同信息,如果您需要申请线下交付或进行产品测试等,请联系[contact@siliconflow.cn](mailto:contact@siliconflow.cn)。

(7) 为了向您提供域名服务,依据工信部相关要求,我们会收集域名持有者名称、联系人姓名、通讯地址、地区、邮编、电子邮箱、固定电话/手机号码、证件号码、证件照片。您理解并授权我们和第三方域名服务机构使用这些信息资料为您提供域名服务。

(8) 为了向您提供证书中心服务,依据数字证书认证机构相关要求,我们会收集联系人姓名、联系人邮箱、联系人手机号码、企业名称、企业所在城市、企业地址。您理解并授权我们和第三方证书服务机构使用这些信息资料为您提供证书中心服务。

(9) 您知悉并同意,对于您在使用产品及/或服务的过程中提供的您的联系方式(即联系电话及电子邮箱),我们在运营中可能会向其中的一种或多种发送通知,用于用户消息告知、身份验证、安全验证、用户使用体验调研等用途;此外,我们也可能会向在前述过程中收集的手机号码通过短信、电话的方式,为您提供您可能感兴趣的服务、功能或活动等商业性信息的用途,但请您放心,如您不愿接受这些信息,您可以通过手机短信或回复邮件退订方式进行退订,也可以直接与我们联系进行退订。

### 1.2 我们可能从第三方获得的用户信息

为了给您提供更好、更优、更加个性化的服务,或共同为您提供服务,或为了预防互联网欺诈的目的,我们的关联公司、合作伙伴可能会依据法律规定或与您的约定或征得您同意前提下,向我们分享您的信息。我们会根据相关法律法规规定和/或该身份认证功能所必需,采用行业内通行的方式及尽最大的商业努力来保护您用户信息的安全。

### 1.3 收集、使用用户信息目的变更的处理

请您了解,随着我们业务的发展,我们提供的功能或服务可能有所调整变化。原则上,当新功能或服务与我们当前提供的功能或服务相关时,收集与使用的用户信息将与原处理目的具有直接或合理关联。仅在与原处理目的无直接或合理关联的场景下,我们收集、使用您的用户信息,会再次按照法律法规及国家标准的要求以页面提示、交互流程、协议确认方式另行向您进行告知说明。

### 1.4 业务和客户数据

请您理解,您的业务和客户数据不同于用户信息,本平台将按如下方式处理:

**1.4.1** 您通过本平台提供的服务(不包括第三方服务)与开源模型、第三方网站、软件、应用程序或服务之间进行输入、反馈、修正、加工、存储、上传、下载、分发以及通过其他方式所产生或处理(**“处理”**)的数据,均为您的业务和客户数据(**“交互数据”**),您完全拥有您的交互数据。本平台作为中立的技术服务提供者,本平台提供的技术服务只会严格执行您的指示处理您的交互数据,除非法律法规另有规定、依据特定产品规则另行约定或基于您的要求为您提供技术协助进行故障排除或解决技术问题,我们不会访问您的交互数据,亦不会对您的交互数据进行任何非授权的使用或披露。

**1.4.2** 您应对您的交互数据来源及内容负责,我们提示您谨慎判断数据来源及内容的合法性。您应保证有权授权本平台通过提供技术服务对该等交互数据进行处理,且前述处理活动均符合相关法律法规的要求,不存在任何违法违规、侵权或违反与第三方合同约定的情形,亦不会将数据用于违法违规目的。若因您的交互数据内容或您处理以及您授权本平台处理交互数据行为违反法律法规、部门规章或国家政策而造成的全部结果及责任均由您自行承担。

**1.4.3** 受限于前款规定,若您提供的交互数据包含任何个人信息或需要获得授权后才能使用的信息时(**“需授权信息”**),您应当自行依法提前向相关信息主体履行告知义务,并取得相关信息主体的单独同意,并保证:

(1)在我们要求时,您能就需授权信息的来源及其合法性提供书面说明和确认;

(2)在我们要求时,您能提供需授权信息的授权范围,包括使用目的,个人信息主体同意您使用本平台对其个人信息进行处理;如您超出该等需授权信息的授权范围或期限时,您应自行负责获得需授权信息授权的范围扩大或延期。

您理解并同意,除非满足上述条件及法律要求的其他义务,您不得向我们提供包含需授权信息的交互数据。

如您违反上述义务,或未按照要求向我们提供合理满意的证明,或我们收到个人信息主体举报或投诉,我们有权单方决定拒绝继续提供技术服务(根据实际情形,包括通过限制相关服务功能,冻结、注销或收回账号等方式),或拒绝按照您的指令处理相关个人信息及其相关的数据,由此产生的全部责任均由您自行承担。

**1.4.4** 您理解并同意,您应根据自身需求自行对您的交互数据进行存储,我们仅依据相关法律法规要求或特定的服务规则约定,提供数据存储服务(例如:您和我们的数据中心就存储您的业务和客户数据有专项约定)。您理解并同意,除非法律法规另有规定或依据服务规则约定,我们没有义务存储您的数据或信息,亦不对您的数据存储工作或结果承担任何责任。

## 2. 对Cookie和同类技术的使用

Cookie和同类技术是互联网中的通用常用技术。当您使用本平台时,我们可能会使用相关技术向您的设备发送一个或多个Cookie或匿名标识符,以收集和存储您的账号信息、搜索记录信息以及登录状态信息。通过Cookie和同类技术可以帮助您省去重复填写账号信息、输入搜索内容的步骤和流程,还可以帮助我们改进服务效率、提升登录和响应速度。

您可以通过浏览器设置拒绝或管理Cookie。但请注意,如果停用Cookie,您有可能无法享受最佳的服务体验,某些功能的可用性可能会受到影响。我们承诺,我们不会将通过Cookie或同类技术收集到的您的用户信息用于本隐私政策所述目的之外的任何其他用途。

## 3. 我们如何存储您的用户信息

### 3.1 信息存储的地点

出于服务专业性考虑,我们可能委托关联公司或其他法律主体向您提供本平台上一项或多项具体服务。我们依照法律法规的规定,将在境内运营本网站和相关服务过程中收集和产生的用户信息存储于中华人民共和国境内。

### 3.2 信息存储的期限

**3.2.1** 我们将仅在为提供本平台及相关服务之目的所必需的期间内保留您的用户信息,但您理解并认可基于不同的服务及其功能需求,必要存储期限可能会有所不同。我们用于确定存储期限的标准包括:

(1) 完成该业务目的需要留存用户信息的时间,包括提供服务,依据法律要求维护相应的交易及业务记录,保证系统和服务的安全,应对可能的用户查询或投诉、问题定位等;

(2) 用户同意的更长的留存期间;

(3) 法律、合同等对保留用户信息的特殊要求等。

**3.2.2** 在您未撤回授权同意、删除或注销账号期间,我们会保留相关信息。超出必要期限后,我们将对您的信息进行删除或匿名化处理,**前述情况若法律法规有强制留存要求的情况下,即使您注销您的账户或要求删除您的用户信息,我们亦无法删除或匿名化处理您的用户信息。**

## 4.我们如何共享、转让、公开披露您的信息

### 4.1 数据使用过程中涉及的合作方

**4.1.1** 原则

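(1) Lawfulness: any data use arising from cooperation with a partner must have a lawful purpose and a statutory legal basis. If a partner's use of information no longer satisfies the lawfulness principle, the partner must stop using your user information, or resume use only after obtaining the appropriate legal basis.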

(2) 正当与最小必要原则:数据使用必须具有正当目的,且应以达成目的必要为限。

(3) 安全审慎原则:我们将审慎评估合作方使用数据的目的,对这些合作方的安全保障能力进行综合评估,并要求其遵循合作法律协议。我们会对合作方获取信息的软件工具开发包(SDK)、应用程序接口(API)进行严格的安全监测,以保护数据安全。

**4.1.2** 委托处理

对于委托处理用户信息的场景,我们会与受托合作方根据法律规定签署相关处理协议,并对其用户信息使用活动进行监督。

**4.1.3** 共同处理

对于共同处理用户信息的场景,我们会与合作方根据法律规定签署相关协议并约定各自的权利和义务,确保在使用相关用户信息的过程中遵守法律的相关规定、保护数据安全。

**4.1.4** 合作方的范围

若具体功能和场景中涉及由我们的关联方、第三方提供服务,则合作方范围包括我们的关联方与第三方。

### 4.2 用户信息共同数据处理或数据委托处理的情形

**4.2.1** 本平台及相关服务功能中的某些具体模块或功能由合作方提供,对此您理解并同意,在我们与任何合作方合作中,我们仅会基于合法、正当、必要及安全审慎原则,在为提供服务所最小的范围内向其提供您的用户信息,并且我们将努力确保合作方在使用您的信息时遵守本隐私政策及我们要求其遵守的其他适当的保密和安全措施,承诺不得将您的信息用于其他任何用途。

**4.2.2** 为提供更好的服务,我们可能委托合作方向您提供服务,包括但不限于客户服务、支付功能、实名认证、技术服务等,因此,为向您提供服务所必需,我们会向合作方提供您的某些信息。例如:

(1) 为进行用户实名认证在您使用身份认证的功能或相关服务所需时,根据相关法律法规的规定及相关安全保障要求可能需要完成实名认证以验证您的身份。在实名认证过程中,与我们合作的认证服务机构可能需要使用您的真实姓名、身份证号码、手机号码等。

(2) 支付功能由与我们合作的第三方支付机构向您提供服务。第三方支付机构为提供功能可能使用您的姓名、银行卡类型及卡号、有效期、身份证号码及手机号码等。

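(3) To handle your complaints, suggestions, and other requests promptly, our customer service providers (if any) may need to use your account and the information about the matter concerned, so that they can understand, handle, and respond to the issue in a timely manner.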

**4.2.3** 为保障服务安全与分析统计的数据使用

(1) 保障使用安全:我们非常重视账号与服务安全,为保障您和其他用户的账号与财产安全,使您和我们的正当合法权益免受不法侵害,我们和我们的合作方可能需要使用必要的设备、账号及日志信息。

(2) 分析服务使用情况:为分析我们服务的使用情况,提升用户使用的体验,我们和我们的合作方可能需要使用您的服务使用情况(崩溃、闪退)的统计性数据,这些数据难以与其他信息结合识别您的身份或与您的身份相关联。

### 4.3 用户信息的转移

我们不会转移您的用户信息给任何其他第三方,但以下情形除外:

**4.3.1** 基于您的书面请求,并符合国家网信部门规定条件的,我们会向您提供转移的途径。

**4.3.2** 获得您的明确同意后,我们会向其他第三方转移您的用户信息。

**4.3.3** 在涉及本平台运营主体变更、合并、收购或破产清算时,如涉及到用户信息转移,我们会依法向您告知有关情况,并要求新的持有您的信息的公司、组织继续接受本隐私政策的约束或按照不低于本隐私政策所要求的安全标准继续保护您的信息,否则我们将要求该公司、组织重新向您征求授权同意。如发生破产且无数据承接方的,我们将对您的信息做删除处理。

### 4.4 用户信息的公开披露

**4.4.1** 原则上我们不会公开披露您的用户信息,除非获得您明确同意或遵循国家法律法规规定的披露。

(1) 获得您明确同意或基于您的主动选择,我们可能会公开披露您的用户信息;

(2) 为保护您或公众的人身财产安全免遭侵害,我们可能根据适用的法律或本平台相关协议、规则披露关于您的用户信息。

**4.4.2** 征得授权同意的例外

请您理解,在下列情形中,根据法律法规及相关国家标准,我们收集和使用您的用户信息不必事先征得您的授权同意:

(1) 与我们履行法律法规规定的义务相关的;

(2) 与国家安全、国防安全直接相关的;

(3) 与公共安全、公共卫生、重大公共利益直接相关的;

(4) 与刑事侦查、起诉、审判和判决执行等直接相关的;

(5) 出于维护您或他人的生命、财产等重大合法权益但又很难得到本人授权同意的;

(6) 您自行向社会公众公开的信息;

(7) 根据用户信息主体要求签订和履行合同所必需的;

(8) 从合法公开披露的信息中收集的您的信息的,如合法的新闻报道、政府信息公开等渠道;

(9) 用于维护软件及相关服务的安全稳定运行所必需的,例如发现、处置软件及相关服务的故障;

(10) 为开展合法的新闻报道所必需的;

(11) 为学术研究机构,基于公共利益开展统计或学术研究所必要,且对外提供学术研究或描述的结果时,对结果中所包含的个人信息进行去标识化处理的;

(12) 法律法规规定的其他情形。

特别提示您注意,如信息无法单独或结合其他信息识别到您的个人身份,其不属于法律意义上您的个人信息;当您的信息可以单独或结合其他信息识别到您的个人身份时,这些信息在结合使用期间,将作为您的用户信息按照本隐私政策处理与保护。

## 5. 我们如何保护您的信息安全

我们非常重视用户信息的安全,将努力采取合理的安全措施(包括技术方面和管理方面)来保护您的信息,防止您提供的信息被不当使用或未经授权的情况下被访问、公开披露、使用、修改、损坏、丢失或泄漏。 我们会使用不低于行业通行的加密技术、匿名化处理等合理可行的手段保护您的信息,并使用安全保护机制尽可能地降低您的信息遭到恶意攻击的可能性。

我们会有专门的人员和制度保障您的信息安全。我们采取严格的数据使用和访问制度。尽管已经采取了上述合理有效措施,并已经遵守了相关法律规定要求的标准,但请您理解,由于技术的限制以及可能存在的各种恶意手段,在互联网行业,即便竭尽所能加强安全措施,也不可能始终保证信息百分之百的安全,我们将尽力确保您提供给我们的信息的安全性。您知悉并理解,您接入我们的服务所用的系统和通讯网络,有可能因我们可控范围外的因素而出现问题。因此,我们强烈建议您采取积极措施保护用户信息的安全,包括但不限于使用复杂密码、定期修改密码、不将自己的账号密码等信息透露给他人。

我们会制定应急处理预案,并在发生用户信息安全事件时立即启动应急预案,努力阻止该等安全事件的影响和后果扩大。一旦发生用户信息安全事件(泄露、丢失等)后,我们将按照法律法规的要求,及时向您告知:安全事件的基本情况和可能的影响、我们已经采取或将要采取的处置措施、您可自主防范和降低风险的建议、对您的补救措施等。我们将及时将事件相关情况以推送通知、邮件、信函或短信等形式告知您,难以逐一告知时,我们会采取合理、有效的方式发布公告。同时,我们还将按照相关监管部门要求,上报用户信息安全事件的处置情况。

我们谨此特别提醒您,本隐私政策提供的用户信息保护措施仅适用于本平台及相关服务。一旦您离开本平台及相关服务,浏览或使用其他网站、产品、服务及内容资源,我们即没有能力及义务保护您在本平台及相关服务之外的软件、网站提交的任何信息,无论您登录、浏览或使用上述软件、网站是否基于本平台的链接或引导。

## 6. 我们如何管理您的用户信息

我们非常重视对用户信息的管理,并依法保护您对于您信息的查阅、复制、更正、补充、删除以及撤回授权同意、注销账号、投诉举报等权利,以使您有能力保障您的隐私和信息安全。

### 6.1 您在用户信息处理活动中的权利

**6.1.1** 一般情况下,您可以查阅、复制、更正、补充、访问、修改、删除您主动提供的用户信息。

**6.1.2** 在以下情形中,您可以向我们提出删除用户信息的请求:

(1) 如果处理目的已实现、无法实现或者为实现处理目的不再必要;

(2) 如果我们处理用户信息的行为违反法律法规;

(3) 如果我们收集、使用您的用户信息,却未征得您的同意;

(4) 如果我们处理用户信息的行为违反了与您的约定;

(5) 如果我们停止提供产品或者服务,或用户信息的保存期限已届满;

(6) 如果您撤回同意授权;

(7) 如果我们不再为您提供服务;

(8) 法律、行政法规规定的其他情形。

### 6.2 撤回或改变您授权同意的范围

**6.2.1** 您理解并同意,每项服务均需要一些基本用户信息方得以完成。除为实现业务功能收集的基本用户信息外,对于额外收集的用户信息的收集和使用,您可以选择撤回您的授权,或改变您的授权范围。您也可以通过注销账号的方式,撤回我们继续收集您用户信息的全部授权。

**6.2.2** 您理解并同意,当您撤回同意或授权后,将无法继续使用与撤回的同意或授权所对应的服务,且本平台也不再处理您相应的用户信息。但您撤回同意或授权的决定,不会影响此前基于您的授权而开展的用户信息处理。

### 6.3 如何获取您的用户信息副本

我们将根据您的书面请求,为您提供以下类型的用户信息副本:您的基本资料、用户身份信息。但请注意,我们为您提供的信息副本仅以我们直接收集的信息为限。

### 6.4 响应您的请求

您享有注销账号、举报或投诉的权利。为保障安全,您可能需要提供书面请求,并以其它方式证明您的身份。 对于您合理的请求,我们原则上不收取费用。但对多次重复、超出合理限度的请求,我们将视具体情形收取一定成本费用。对于那些无端重复、需要过多技术手段(例如,需要开发新系统或从根本上改变现行惯例)、给他人合法权益带来风险或者不切实际(例如,涉及备份磁带上存放的信息)的请求,我们可能会予以拒绝。

您理解并认可,在以下情形中,我们将无法响应您的请求:

(1) 与我们履行法律法规规定的义务相关的;

(2) 与国家安全、国防安全直接相关的;

(3) 与公共安全、公共卫生、重大公共利益直接相关的;

(4) 与刑事侦查、起诉、审判和执行判决等直接相关的;

(5) 我们有充分证据表明用户信息主体存在主观恶意或滥用权利的;

(6) 出于维护用户信息主体或其他个人的生命、财产等重大合法权益但又很难得到本人同意的;

(7) 响应用户信息主体的请求将导致用户信息主体或其他个人、组织的合法权益受到严重损害的;

(8) 涉及商业秘密的。

### 6.5 停止运营向您告知

如我们停止运营,我们将停止收集您的用户信息,并将停止运营的通知以逐一送达或公告等商业上可行的形式通知您,并对我们所持有的您的用户信息进行删除或匿名化处理。

## 7. 未成年人使用条款

我们的服务主要面向企业或相关组织。未成年人(不满十四周岁)不应创建任何账户或使用本平台及其服务。如果我们发现在未事先获得可证实的监护人同意的情况下提供了用户信息,经未成年人的监护人书面告知后我们会设法尽快删除相关信息。

## 8. 隐私政策的修订和通知

为了给您提供更好的服务,本平台及相关服务将不时更新与变化,我们会适时对本隐私政策进行修订,该等修订构成本隐私政策的一部分并具有等同于本隐私政策的效力。但未经您明确同意,我们不会严重减少您依据当前生效的隐私政策所应享受的权利。

本隐私政策更新后,我们会在本平台公布更新版本,并在更新后的条款生效前通过官方网站公告或其他适当的方式提醒您更新的内容,以便您及时了解本隐私政策的最新版本。如您继续使用本平台及相关服务,视为您同意接受修订后的本隐私政策的全部内容。

对于重大变更,我们还会提供更为显著的通知(包括但不限于电子邮件、短信、系统消息或在浏览页面做特别提示等方式),向您说明本隐私政策的具体变更。

本隐私政策所指的重大变更包括但不限于:

(1) 我们的服务模式发生重大变化。如处理用户信息的目的、处理用户信息的类型、用户信息的使用方式等;

(2) 我们在所有权结构、组织架构等方面发生重大变化。如业务调整、破产并购等引起的所有变更等;

(3) 用户信息传输、转移或公开披露的主要对象发生变化;

(4) 您参与用户信息处理方面的权利及其行使方式发生重大变化;

(5) 我们的联络方式及投诉渠道发生变化时。

## 9. 适用范围

本隐私政策适用于本平台提供产品、服务、解决方案以及公司后续可能不时推出的纳入服务范畴内的其他产品、服务或解决方案。

本隐私政策不适用于有单独的隐私政策且未纳入本隐私政策的第三方通过本平台向您提供的产品或服务(**“第三方服务”**)。您使用这些第三方服务(包括您向这些第三方提供的任何含用户信息在内的信息),将受这些第三方的服务条款及隐私政策约束(而非本隐私政策),并通过其建立的用户信息主体请求和投诉等机制,提出相关请求、投诉举报,具体规定请您仔细阅读第三方的条款。请您妥善保护自己的用户信息,仅在必要的情况下向第三方提供。

需要特别说明的是,作为本平台的用户,若您利用本平台的技术服务,为您的用户再行提供服务,因您与客户的业务合作所产生的数据属于您所有,您应当与您的用户自行约定相关隐私政策,本隐私政策不作为您与您的用户之间的隐私政策的替代。

***

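1. Core differences between "user information" and "interaction data":

| Comparison item | User information | Interaction data |
| --- | --- | --- |
| Use/disclosure by the platform | Where permitted by law and for the purpose of providing platform functionality, the platform may use or disclose it | Except where compelled by law, the platform will not use or disclose it without authorization |
| Storage | Stored by the platform as required by applicable law | Users should store it themselves; the platform has no obligation to store it |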

# 用户充值协议

Source: https://docs.siliconflow.cn/cn/legals/recharge-policy

更新日期:2025年3月5日

尊敬的用户,为保障您的合法权益,请您在点击“确认支付”按钮前,完整、仔细地阅读本充值协议,当您点击“确认支付”按钮,即表示您已阅读、理解本协议内容,并同意按照本协议约定的规则进行充值和使用余额行为。如您不接受本协议的部分或全部内容,请您不要点击“确认支付”按钮。

## 1. 接受条款

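Welcome to the SiliconCloud platform. The following terms and conditions constitute the agreement between users who recharge on the platform ("users" or "you") and Beijing SiliconFlow Technology Co., Ltd. ("SiliconFlow") regarding recharging and the use of balances.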

当您以在线点击“确认支付”等方式确认本协议或实际进行充值时,即表示您已理解本协议内容并同意受本协议约束,包括但不限于本协议正文及所有硅基流动已经发布的或将来可能发布的关于服务的各类规则、规范、公告、说明和(或)通知等,以及其他各项网站规则、制度等。所有前述规则为本协议不可分割的组成部分,与协议正文具有同等法律效力。

硅基流动有权根据国家法律法规的变化以及实际业务运营的需要不时修改本协议相关内容,并提前公示于软件系统、网站等以通知用户。修改后的条款应于公示通知指定的日期生效。如果您选择继续充值即表示您同意并接受修改后的协议且受其约束;如果您不同意我们对本协议的修改,请立即放弃充值或者停止使用本服务。

请注意,本协议限制了硅基流动的责任,还限制了您的责任,具体条款将以加粗并加下划线的形式提示您注意,硅基流动督促您仔细阅读。如果您对本协议的条款有疑问的,请通过客服渠道(电子邮箱:[contact@siliconflow.cn](mailto:contact@siliconflow.cn))进行询问,硅基流动将向您解释条款内容。如果您不同意本协议的任意内容,或者无法准确理解硅基流动对条款的解释,请不要同意本协议或使用本协议项下的服务。

## 2. 定义

**2.1** SiliconCloud平台个人充值余额账户:简称“充值余额账户”,指由硅基流动根据用户的SiliconCloud平台账户为用户自动配置的资金账户。用户向该账户充值的行为视为用户向硅基流动预付服务费,预付服务费可用于购买SiliconCloud平台提供的产品或服务。

## 3. 充值条件

当您充值时,您应该具有经实名认证成功后的SiliconCloud平台账户。

## 4. 账户安全

**4.1** 当用户进行充值时,用户应仔细确认自己的账号及信息,若因为用户自身操作不当、不了解或未充分了解充值计费方式等因素造成充错账号、错选充值种类等情形而损害自身权益,应由用户自行承担责任。

**4.2** 用户在充值时使用第三方支付企业提供的服务的,应当遵守与该第三方的各项协议及其服务规则;在使用第三方支付服务过程中用户应当妥善保管个人信息,包括但不限于银行账号、密码、验证码等;用户同意并确认,硅基流动对因第三方支付服务产生的纠纷不承担任何责任。

## 5. 充值方式

**5.1** 用户充值可以选择硅基流动认可的第三方支付企业(目前支持微信支付)支付充值金额。

**5.2** 用户如委托第三方对其消耗账户充值,则用户承诺并保证其了解和信任第三方,且第三方亦了解和同意接受用户委托,为用户充值;否则,如硅基流动被第三方告知该等充值非经第三方同意,则硅基流动有权立即封禁用户的 SiliconCloud 账户(账户封禁期间,硅基流动将暂停用户使用本平台服务,包括不限于用户的 API 请求或任何线上功能,下同)。自用户的消耗账户被封禁之日起30日内,用户应提供充足证据证实第三方事先同意为其充值,否则用户同意并授权硅基流动配合第三方的要求,自用户被锁定的消耗账户中将相应款项退还第三方。如届时用户的消耗账户余额不足以退还,则短缺部分,用户同意最晚在30日内充值相应金额,委托硅基流动退还,或自其微信账户或支付宝账户自行退还,除非第三方同意用户可不退还这部分款项。

**5.3** 用户承诺并保证用于其充值余额账户充值的资金来源的合法性,否则硅基流动有权配合司法机关或其他政府主管机关的要求,对用户的 SiliconCloud 账户进行相应处理,包括但不限于封禁用户的 SiliconCloud 账户等。

## 6. 充值余额和赠送余额

**6.1** 充值余额,是指您进行在线充值并实际支付的金额(人民币),不包括任何形式的赠送金额,可在SiliconCloud 平台消耗使用。

**6.2** 赠送余额,是指根据 SiliconCloud 平台不时推出的充值优惠运营活动,在充值余额以外、额外赠予的金额(包括但不限于邀请奖励等)。赠送余额不可提现、不可转让,不可开具发票。赠送余额将依据对应的活动规则发放并依据平台服务规则使用,硅基流动对赠送金额的可用范围享有最终解释权。

## 7. 充值余额使用

**7.1** 您充值后,充值余额的使用不设有效期,不能转移、转赠。因此,请您根据自己的消耗情况选择充值金额,硅基流动对充值次数不做限制。

**7.2** 充值成功后,通常您可以立即开始使用相应产品(或服务),部分情况下可能存在延迟到账的情况,若较长时间仍未到账您可通过客服渠道(电子邮箱:[contact@siliconflow.cn](mailto:contact@siliconflow.cn))联系硅基流动的服务支持人员为您处理。

## 8. 发票

硅基流动将在您的充值金额消耗后,按照实际消耗金额,根据您订购的产品(或服务)协议开具相应发票。

## 9. 关于退款

**9.1** 您应充分预估实际需求并确定充值金额。

**9.2** 若您使用在线支付方式(目前为微信支付),通过第三方支付企业进行充值,充值完成后 360 日内,对于尚未消费的余额您可自行操作退款。硅基流动及其合作第三方支付企业将对用户的退款操作进行审核,若审核通过将会按照原支付路径退回,并可能产生相应手续费。

**9.3** 原则上,您的每一笔在线支付充值仅支持一次退款。如遇特殊情况,您可通过客服渠道(电子邮箱:[contact@siliconflow.cn](mailto:contact@siliconflow.cn))联系硅基流动的服务支持人员为您处理。

**9.4** 您通过对公转账或其他方式充值的金额,不支持自助退款,您可通过客服渠道(电子邮箱:[contact@siliconflow.cn](mailto:contact@siliconflow.cn))联系硅基流动的服务支持人员,配合提供相关证明材料,硅基流动将在审核完成后将应退金额返回给用户,并可能产生相应手续费。

**9.5** 赠送余额(包括但不限于邀请奖励及同类非现金折扣等)不支持申请退款。

**9.6** 您完成充值并已经消耗的或根据相关产品(或服务)协议应予扣除的,将不予退还。

## 10. 争议解决

本协议适用中华人民共和国大陆地区法律。用户如因本协议与硅基流动发生争议的,双方应首先友好协商解决,如协商不成的,该等争议将由北京市海淀区人民法院管辖。

# 用户协议

Source: https://docs.siliconflow.cn/cn/legals/terms-of-service

更新日期:2025年02月27日

这是您与北京硅基流动科技有限公司及其关联方(**“硅基流动”**或**“我们”**)之间的协议(**“本协议”**),您确认:在您开始试用或购买我们 **SiliconCloud**平台(**本平台**)的产品或服务前,您已充分阅读、理解并接受本协议的全部内容,**一旦您选择“同意”并开始使用本服务或完成购买流程,即表示您同意遵循本协议之所有约定。不具备前述条件的,您应立即终止注册或停止使用本服务**。如您与我们已就您使用本平台服务事宜另行签订其他法律文件,则本协议与该等法律文件冲突的部分对您不适用。**另本平台的详细数据使用政策请见《隐私政策》。**

## 1. 账户管理

**1.1** 您保证自身具有法律规定的完全民事权利能力和民事行为能力,是能够独立承担民事责任的自然人或法人;本协议内容不会被您所属国家或地区的法律禁止。您知悉,**无民事行为能力人、限制民事行为能力人(本平台指十四周岁以下的未成年人)不当注册为平台用户的,其与平台之间的服务协议自始无效,一经发现,平台有权立即停止为该用户服务或注销该用户账号。**

**1.2 账户**

1.2.1 在您按照本平台的要求填写相关信息并确认同意履行本协议的内容后,我们为您注册账户并开通本平台的使用权限,您的账户仅限您本人使用并使您能够访问某些服务和功能,我们可能根据我们的独立判断不时地修改和维护这些服务和功能。

1.2.2 个人可代表公司或其他实体访问和使用本平台,在这种情况下,本协议不仅在我们与该个人之间的产生效力,亦在我们与该等公司或实体之间产生效力。

1.2.3 如果您通过第三方连接/访问本服务,即表明允许我们访问和使用您的信息,并存储您的登录凭据和访问令牌。

1.2.4 账户安全。当您创建帐户时,您有权使用您设置或确认的手机号码及您设置的密码登陆本平台。**我们建议您使用强密码(由大小写字母、数字和符号组合而成的密码)来保护您的帐户。** 您的账户由您自行设置并由您保管,本平台在任何时候均不会主动要求您提供您的账户密码。因此,建议您务必保管好您的账户,**若账户因您主动泄露或因您遭受他人攻击、诈骗等行为导致的损失及后果,本平台不承担责任,您应通过司法、行政等救济途径向侵权行为人追偿。** 您向我们提供您的电子邮件地址作为您的有效联系方式,即表明您同意我们使用该电子邮件地址向您发送相关通知,请您务必及时关注。

1.2.5 账户删除。您应通过本平台指定的方式提交账号注销申请,并按照系统提示填写相关信息,包括但不限于账号ID、注册手机号、注销原因等,以便本平台核实身份。如审核通过,本平台将通过短信向您发送账号注销通知;如审核不通过,本平台将通过短信告知您原因。自账号注销通知送达您之日起,您将无法再登陆并使用该账号,账号相关的所有权益立即终止。账号注销后,本平台将按照法律法规的要求对您在平台上留存的信息进行删除,但法律法规另有规定或双方另有约定的除外。

**1.3** 变更、暂停和终止。**我们**在尽最大努力以本平台公告、站内信、邮件或短信等一种或多种方式进行事先通知的情况下,**我们可以变更、暂停或终止向您提供服务,或对服务设置使用限制,而无需承担责任。可以在任何时候停用您的帐户**。即便您的账户因任何原因而终止后,您将继续受本协议的约束。

**1.4** 在法律有明确规定要求的情况下,本平台作为平台服务提供者若必须对用户的信息进行核实的情况下,本平台将依法不时地对您的信息进行检查核实,您应当配合提供最新、真实、完整、有效的信息。**若本平台无法依据您提供的信息进行核验时,本平台可向您发出询问或要求整改的通知,并要求您进行重新认证,直至中止、终止对您提供部分或全部平台服务,本平台对此不承担任何责任。**

**1.5** 您应当为自己与其他用户之间的交互、互动、交流、沟通负责。我们保留监督您与其他用户之间争议的权利。我们不因您与其他用户之间的互动以及任何用户的作为或不作为而承担任何责任,包括与**交互数据**(定义见下文)相关的责任。

## 2. 访问服务及服务限制

**2.1** 访问服务。在您遵守本协议的前提下,您在此被授予非排他性的、不可转让的访问和使用本服务的权利,仅用于您个人使用或您代表的公司或其他实体内部业务目的。我们保留本协议中未明确授予的所有权利。

**2.2 服务限制**

2.2.1 对服务的任何部分进行反汇编、反向工程、解码或反编译;

2.2.2 将本服务上或通过本服务提供的任何内容(包括任何标题信息、关键词或其他元数据)用于任何机器学习和人工智能培训或开发目的,或用于旨在识别自然人身份的任何技术;

2.2.3 未经我们事先书面同意,购买、出售或转让API密钥;

2.2.4 复制、出租、出售、贷款、转让、许可或意图转授、转售、分发、修改本服务任何部分或我们的任何**知识产权**(定义见下文);

2.2.5 采取可能对我们的服务器、基础设施等造成不合理的巨大负荷的任何行为;

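**2.2.6 Using the Platform's services in any of the following ways or for any of the following purposes: (i) opposing the basic principles established by the Constitution; (ii) endangering national security, divulging state secrets, subverting state power, or undermining national unity; (iii) harming the honor and interests of the state; (iv) inciting regional discrimination or regional hatred; (v) inciting ethnic hatred or ethnic discrimination, or undermining ethnic unity; (vi) undermining the state's religious policies, or promoting cults and feudal superstition; (vii) spreading rumors, disturbing social order, or undermining social stability; (viii) disseminating obscenity, pornography, gambling, violence, murder, or terror, or abetting crime; (ix) insulting or defaming others, or infringing the lawful rights and interests of others; (x) inciting unlawful assembly, association, procession, or demonstration, or gathering crowds to disturb social order; (xi) acting in the name of an illegal non-governmental organization; (xii) involving works that are not your own and may give rise to copyright disputes; (xiii) potentially infringing the prior rights of others; (xiv) threatening or intimidating others with violence, or doxxing them; (xv) involving the privacy, personal information, or data of others; (xvi) containing content that infringes others' lawful rights and interests, such as privacy, reputation, portrait, or intellectual property rights; (xvii) infringing the lawful rights and interests of minors or harming their physical or mental health; (xviii) secretly photographing or recording others without their permission, infringing their lawful rights; (xix) violating the "seven bottom lines" of laws and regulations, the socialist system, national interests, citizens' lawful rights and interests, public order, morality, and the authenticity of information; (xx) otherwise prohibited by applicable laws and administrative regulations.**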

2.2.7 绕开我们可能用于阻止或限制访问服务的措施,包括但不限于阻止或限制使用或复制任何内容或限制使用服务或其任何部分的功能;

2.2.8 试图干扰、破坏运行服务的服务器的系统完整性或安全性,或破译与运行服务的服务器之间的任何传输;

2.2.9 使用本服务发送垃圾邮件、连锁信或其他未经请求的电子邮件;

2.2.10 通过本服务传输违法数据、病毒或其他软件代理;

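2.2.11 Impersonating any person or entity, misrepresenting your relationship with any person or entity, hiding or attempting to hide your identity, or otherwise using the service for any intrusive or fraudulent purpose;

2.2.12 Collecting or obtaining from the service any personal information, including but not limited to the names of other users.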

2.2.13 其他未经我们明示授权的行为或可能损害我们利益的使用方式。

## 3. 交互数据

**3.1** 本服务可能允许用户在注册后,基于平台使用目的在使用模型过程中与开源模型、第三方网站、软件、应用程序或服务之间进行输入、反馈、修正、加工、存储、上传、下载、分发相关个人资料信息、视频、图像、音频、评论、问题和其他内容、文件、数据和信息(**“交互数据”**)。**本平台就详细数据使用政策请见本平台的《隐私政策》。**

**3.2** 如交互数据存在任何违反法律法规或本协议的情况,我们有权利删除或停止技术服务。

**3.3** 关于您的交互数据,您确认、声明并保证:

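**3.3.1 When we so request, you can provide a written explanation or authorization regarding the source and legality of any personal information, or information that may only be used with authorization, contained in the interaction data; if you exceed the scope or term of such authorization, you are responsible for obtaining an extension of that scope or term;**

**3.3.2 Your interaction data, and our use of it in accordance with this Agreement, will not violate any applicable law or infringe any right of any third party, including but not limited to any intellectual property right or privacy right;**

**3.3.3 Your interaction data does not include any information or material that a government authority regards as sensitive or confidential, and the interaction data you provide in connection with the service does not infringe any confidentiality right of any third party;**

**3.3.4 You will not upload, or otherwise provide through the service directly or indirectly, any personal information of children under the age of 14;**

**3.3.5 Your interaction data does not include nudity or other sexually suggestive content; hate speech, threats, or direct attacks against individuals or groups; content that is abusive, harassing, infringing, defamatory, vulgar, obscene, or invasive of others' privacy; sexist content or content that discriminates on the basis of race, ethnicity, or otherwise; content depicting self-harm or excessive violence; forged or impersonating profiles; illegal content or content that promotes harmful or illegal activities; malicious programs or code; personal information of any person without that person's consent; or spam, machine-generated content, unsolicited messages, or other objectionable content;**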

3.3.6 据您所知,您提供给我们的所有交互数据和其他信息都是真实和准确的。

**3.4 本平台作为独立的技术支持者,您利用本平台接入大模型所产生的全部交互数据及义务和责任均由您承担,本平台不对由此造成的任何损失负责。**

**3.5 本平台作为独立的技术支持者,您利用本平台向任何第三方提供服务,相应的权利义务和责任均由您承担,本平台不对由此造成的任何损失负责。**

**3.6 免责声明。我们对任何交互数据概不负责。您将对您输入、反馈、修正、加工、存储、上传、下载、分发在本平台及模型服务上的交互数据负责并承担全部责任。本平台提供的技术服务只会严格执行您的指示处理您的交互数据,除非法律法规另有规定、依据特定产品规则另行约定或基于您的要求为您提供技术协助进行故障排除或解决技术问题,我们不会访问您的交互数据,您理解并认同我们及本平台只是作为交互数据的被动技术支持者或渠道,对于交互数据我们没有义务进行存储,亦不会对您的交互数据进行任何非授权的使用或披露。同时我们仅在合法合规的基础上且基于向您提供本平台服务的前提下使用您的交互数据。**

## 4. 知识产权

**4.1** 定义。就本协议而言,“知识产权”系指所有专利权、著作权、精神权利、人格权、商标权、商誉、商业秘密权、技术、信息、资料等,以及任何可能存在或未来可能存在的知识产权和所有权,以及根据适用法律提出的所有申请中、已注册、续期的知识产权。

**4.2** 硅基流动知识产权。您理解并承认,我们拥有并将持续拥有本服务的所有权利(包括知识产权),您不得访问、出售、许可、出租、修改、分发、复制、传输、展示、发布、改编、编辑或创建任何该等知识产权的衍生作品。严禁将任何知识产权用于本协议未明确许可的任何目的。本协议中未明确授予您的权利将由硅基流动保留。

**4.3** 输出。在您遵守如下事项且在合法合规的基础上,可以将大模型产出的结果进行使用:(i)您对服务和输出的使用不会转移或侵犯任何知识产权(包括不会侵犯硅基流动知识产权和其他第三方知识产权);(ii)如果我们酌情认为您对输出的使用违反法律法规或可能侵犯任何第三方的权利,我们可以随时限制您对输出的使用并要求您停止使用输出(并删除其任何副本);(iii)您不得表示大模型的输出结果是人为生成的;(iv)您不得违反任何模型提供商的授权许可或使用限制。

您同意,我们不对您或任何第三方声称因由我们提供的技术服务而产生的任何输出内容或结果承担任何责任。

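**4.4 User usage data. We may collect, or you may provide to us, diagnostic, technical, and usage-related information, including information about your computer, mobile device, system, and software ("user usage data"). To the extent permitted by law and as needed to maintain and operate the platform, we may use, maintain, and process user usage data or any part of it, including but not limited to: (a) providing and maintaining the service; and (b) improving our products and services or developing new products or services (see footnote 1). For the detailed data use policy, see the Platform's Privacy Policy.**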

**4.5** 反馈。 如果您向我们提供有关本服务或任何其他硅基流动产品或服务的任何建议或反馈(**“反馈”**),则您在此将所有对反馈的权益转让给我们,我们可自由使用反馈以及反馈中包含的任何想法、专有技术、概念、技术和知识产权。反馈被视为我们的**保密信息**(定义如下)。

## 5. 保密信息

本服务可能包括硅基流动和其他用户的非公开、专有或保密信息(**“保密信息”**)。保密信息包括任何根据信息的性质和披露情况应被合理理解为保密的信息,包括非公开的商业、产品、技术和营销信息。您将:(a)至少以与您保护自己高度敏感的信息相同的谨慎程度保护所有保密信息的隐私性,但在任何情况下都不得低于合理的谨慎程度;(b)除行使您在本协议下的权利或履行您的义务外,不得将任何保密信息用于任何目的;以及(c)不向任何个人或实体披露任何保密信息。

## 6. 计费政策及税费

您理解并同意,本平台提供的部分服务可能会收取使用费用、售后费用或其他费用(**“费用”**)。您通过选择使用本服务即表示您同意您注册网站上载明的适用于您的定价和付款条款(受限于我们的不时更新的定价/付款条件/充值协议等文件),您同意我们相应监控您的使用数据以便完成本服务计费。定价、付款条件和充值协议特此通过引用并入本协议。您同意,我们可能会添加新产品和/或服务的额外费用、增加或修改现有产品和/或服务的费用,我们可能会按照您的实际使用地点设定不同的价格费用,和/或停止随时提供任何服务。未经我们书面同意或本平台有其他相关政策,付款义务一旦发生不可取消,并且已支付的费用不予退还。如存在任何政府要求的税费,您将负责支付与您的所有使用/开通服务相关的税款。若您在购买服务时有任何问题,您可以通过[contact@siliconflow.cn](mailto:contact@siliconflow.cn)联系我们。

## 7. 隐私与数据安全

**7.1** 隐私。基于您注册以及开通相关服务时主动提供给本平台的相关信息(**“用户信息”**),且为了确保您正常使用本平台的相关服务,我们可能对您提供的用户信息进行收集、整理、使用,但我们将持续遵守《中华人民共和国个人信息保护法》及相关适用法律。

**7.2** 数据安全。我们关心您个人信息的完整性和安全性,然而,我们不能保证未经授权的第三方永远无法破坏我们的安全保护措施。

## 8. 使用第三方服务

本服务可能包含非我们拥有或控制的第三方网站、资料和服务(**“第三方服务”**)的链接,本服务的某些功能可能需要您使用第三方服务。我们不为任何第三方服务背书或承担任何责任。如果您通过本服务访问第三方服务或在任何第三方服务上共享您的交互数据,您将自行承担风险,并且您理解本协议不适用于您对任何第三方服务的使用。您明确免除我们因您访问和使用任何第三方服务而产生的所有责任。

## 9. 赔偿

您将为我们及我们的子公司和关联公司及各自的代理商、供应商、许可方、员工、承包商、管理人员和董事(**“硅基流动受偿方”**)进行辩护、赔偿并使其免受因以下原因而产生的任何和所有索赔、损害(无论是直接的、间接的、偶然的、后续的或其他的)、义务、损失、负债、成本、债务和费用(包括但不限于法律费用)的损害:(a)您访问和使用本服务,包括您对任何输出的使用;(b)您违反本协议的任何条款,包括但不限于您违反本协议中规定的任何陈述和保证;(c)您对任何第三方权利的侵犯,包括但不限于任何隐私权或知识产权;(d)您违反任何适用法律;(e)交互数据或通过您的用户账户提交的任何内容,包括但不限于任何误导性、虚假或不准确的信息;(f)您故意的或者存在重大过失的不当行为;或(g)任何第三方使用您的用户名、密码或其他认证凭证访问和使用本服务。

## 10. 免责声明

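**You use the service at your own risk. We expressly disclaim all warranties, conditions, and other terms, whether express, implied, or statutory, including but not limited to those relating to merchantability, fitness for a particular purpose, design, condition, performance, utility, title, and non-infringement. We do not warrant that the service will operate without interruption or error, or that all errors will be corrected. Nor do we warrant that the service, or any equipment, system, or network used in connection with the service, will be free from intrusion or attack.**

**Any content downloaded or otherwise obtained through the service is obtained at your own risk, and you are solely responsible for any damage to your computer system or mobile device and for any loss of data resulting from the foregoing or from your access to and use of the service. In addition, SiliconFlow does not warrant, endorse, guarantee, recommend, or assume responsibility for any product or service advertised or offered by a third party through the service or through any hyperlinked website or service, and SiliconFlow is not a party to, and does not in any way monitor, any transaction between you and third-party providers of products or services.**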

## 11. 责任限制和免责

硅基流动在任何情况下均不对以下损害负责:(a)间接、偶发、示范性、特殊或后果性损害;或者(b)数据丢失或受损,或者业务中断或损失;或者(c)收入、利润、商誉或预期销量或收益损失,无论是在何种法律下,无论此种损害是否因使用或无法使用软件或其他产品引起,即使硅基流动已被告知此种损害的可能性。硅基流动及其关联方、管理人员、董事、员工、代理、供应商和许可方对您承担的所有责任(无论是因保证、合同或侵权(包括疏失))无论因何原因或何种行为方式产生,始终不超过您已支付给硅基流动的费用。本协议任何内容均不限制或排除适用法律规定不得限制或排除的责任。

## 12. 适用法律及争议解决条款

本协议受中华人民共和国(仅为本协议之目的,不包括香港特别行政区、澳门特别行政区及台湾地区)法律管辖。

若在执行本协议过程中如发生纠纷,双方应及时协商解决。协商不成时,我们与您任一方均有权提请北京仲裁委员会按照其届时有效仲裁规则进行仲裁,而此仲裁规则由此条款纳入本协议。仲裁语言为中文。仲裁地将为北京。仲裁结果为终局且对双方都有约束力。

## 13. 其他条款

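**13.1** Assignment. Without our prior express written consent, you may not assign or transfer this Agreement or any rights and licenses granted under it, whereas we may assign them without restriction. Any assignment or transfer in violation of this Agreement is void.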

**13.2** 可分割性。如果本协议的某一条款或某一条款的一部分无效或不可执行,不影响本协议其他条款的有效性,无效或不可执行的条款将被视作已从本协议中删除。

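**13.3** Amendments. In light of changes in applicable laws and regulations and SiliconFlow's operational needs, we will amend this Agreement from time to time, and the amended agreement will replace the previous version. You can consult the current version at any time while using the Platform's services. If you continue to use the service, you are deemed to have accepted the amendments, and in the event of a dispute the latest user agreement prevails. If you do not agree with the amendments, you have the right to stop using the services covered by this Agreement.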

***

1 User usage data is different from interaction data. Interaction data is neither stored nor disclosed; see the Privacy Policy for details.

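# Update announcements

Source: https://docs.siliconflow.cn/cn/release-notes/overview

### Platform service adjustment notice

To further optimize resource allocation and provide more advanced, higher-quality technical services, the platform will take the following models offline on July 3, 2025: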

* Pro/deepseek-ai/DeepSeek-R1-0120

* Pro/deepseek-ai/DeepSeek-V3-1226

* Qwen/QwQ-32B-Preview

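If you are using any of the models above, we recommend switching to another model as soon as possible so that your service is not affected.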
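To check whether your own configuration still references one of these models, a lookup like the following may help. It is only a sketch: `find_retired_models` is a hypothetical helper name, and the set contains only the three model IDs listed in this notice.

```python
from typing import Iterable, List

# Model IDs listed in the notice for retirement on July 3, 2025.
RETIRED_2025_07_03 = frozenset({
    "Pro/deepseek-ai/DeepSeek-R1-0120",
    "Pro/deepseek-ai/DeepSeek-V3-1226",
    "Qwen/QwQ-32B-Preview",
})


def find_retired_models(configured: Iterable[str]) -> List[str]:
    """Return the configured model IDs that appear in the retirement list."""
    return sorted(set(configured) & RETIRED_2025_07_03)
```

For example, running `find_retired_models` over the model names used in your application settings gives you the list of entries to migrate.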

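### Platform maintenance notice

To provide richer, more advanced, and higher-quality services, the platform will undergo maintenance from 23:00 on June 10, 2025 to 08:00 on June 11, 2025.

During the maintenance window:

1. cloud.siliconflow.cn will **suspend** registration, login, and console operations including but not limited to:

   * Online model playground, fine-tuning, and batch inference;

   * Viewing the model list and model details in the model plaza on the website;

   * Online recharge, purchasing tier packages, querying bills, issuing invoices, and so on;

2. The `/user/info` API will change: the `name`, `image`, and `email` fields will no longer be populated and will always be returned as empty strings;

The platform's API services are not affected by the maintenance and can still be called. We recommend checking your account balance in advance so that your service is not restricted by an insufficient balance.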
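Client code that displays these `/user/info` fields should tolerate empty strings. The sketch below shows one defensive pattern. The function name `display_label`, the fallback text, and the assumption that the user fields arrive as a flat dictionary are all illustrative; the notice does not describe the response envelope.

```python
def display_label(user: dict, fallback: str = "SiliconCloud user") -> str:
    """Pick a label to show for a user, tolerating empty or missing fields."""
    for key in ("name", "email"):
        # Treat missing keys, None, and whitespace-only strings the same way.
        value = (user.get(key) or "").strip()
        if value:
            return value
    return fallback
```

Passing the fallback explicitly keeps the UI decision (what to show for an anonymous-looking account) in the caller rather than in the parsing code.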

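### Platform service adjustment notice

SiliconCloud will begin updating the `DeepSeek R1` model.

The `deepseek-ai/DeepSeek-R1` and `Pro/deepseek-ai/DeepSeek-R1` models will be updated gradually to the latest `0528` version.

Once the update is complete, both models will be the `0528` version. If needed, you can still use the previous version through `Pro/deepseek-ai/DeepSeek-R1-0120` until June 28, 2025, to make your migration smoother.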

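### Platform service adjustment notice

To further optimize resource allocation and provide more advanced, higher-quality technical services, the platform will take the following models offline on June 5, 2025: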

* Qwen/Qwen2-1.5B-Instruct

* Pro/Qwen/Qwen2-1.5B-Instruct

* Pro/Qwen/Qwen2-VL-7B-Instruct

* THUDM/chatglm3-6b

* internlm/internlm2\_5-20b-chat

* deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B

* Pro/deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B

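If you are using any of the models above, we recommend switching to another model as soon as possible so that your service is not affected.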

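### Platform service adjustment notice

To further optimize resource allocation and provide more advanced, higher-quality technical services, the platform will take the `HunyuanVideo` model (not `HunyuanVideo-HD`) offline on April 29, 2025.

If you are using this model, we recommend switching to another model as soon as possible so that your service is not affected.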

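### Platform service adjustment notice

As of now, the `Pro/deepseek-ai/DeepSeek-V3` and `deepseek-ai/DeepSeek-V3` models have been updated to the latest 0324 version. You can still use the previous version through `Pro/deepseek-ai/DeepSeek-V3-1226` to make your migration smoother.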

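### Platform service adjustment notice

SiliconCloud will begin updating the DeepSeek V3 model.

The `deepseek-ai/DeepSeek-V3` and `Pro/deepseek-ai/DeepSeek-V3` models will be updated gradually to the latest `0324` version.

Once the update is complete, both models will be the 0324 version. If needed, you can still use the previous version through `deepseek-ai/DeepSeek-V3-1226` until April 30, 2025, to make your migration smoother.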

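### Platform Service Adjustment Notice

To better serve developers worldwide, SiliconCloud will soon launch an international site and gradually open multiple service regions.

As part of this change, the existing `api.siliconflow.com` API endpoint will be retired in due course. Please switch to `api.siliconflow.cn` as soon as possible.

We have configured global traffic acceleration (GTM) for the `.cn` endpoint so that it offers the same global access experience as the current `.com` endpoint. You only need to change the base URL of your API requests to `api.siliconflow.cn`.

We recommend completing the migration by the end of this month (March 31). If you have any questions, please contact us.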
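If base URLs are stored in configuration, the host swap can be applied mechanically. The following is a minimal sketch using only the Python standard library; the function name `migrate_base_url` and the `/v1` path in the usage line are illustrative assumptions, and only the two host names come from this notice.

```python
from urllib.parse import urlsplit, urlunsplit

OLD_HOST = "api.siliconflow.com"
NEW_HOST = "api.siliconflow.cn"


def migrate_base_url(url: str) -> str:
    """Rewrite the retired .com host to the .cn host; leave other URLs untouched."""
    parts = urlsplit(url)
    if parts.hostname != OLD_HOST:
        return url
    # Preserve an explicit port if one was configured.
    netloc = NEW_HOST if parts.port is None else f"{NEW_HOST}:{parts.port}"
    return urlunsplit(parts._replace(netloc=netloc))


# The path is only an example; scheme, path, and query are kept as they were.
print(migrate_base_url("https://api.siliconflow.com/v1"))
```

Matching on the parsed host name rather than doing a plain string replace avoids rewriting unrelated URLs that merely contain the old host as a substring.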

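### Platform Service Adjustment Notice

To keep improving the user experience, the Rate Limits policy is adjusted as follows:

The RPH and RPD limits on `deepseek-ai/DeepSeek-R1` and `deepseek-ai/DeepSeek-V3` are removed.

The policy may be adjusted from time to time as traffic and load change. SiliconFlow reserves the right of interpretation.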

### Platform Service Adjustment Notice

#### 1. Model retirement notice

To further optimize resource allocation and provide more advanced, higher-quality, and compliant technical services, the platform will take some models offline on March 6, 2025.

The affected models are:

* Chat models

  * AIDC-AI/Marco-o1

  * meta-llama/Meta-Llama-3.1-8B-Instruct

  * Pro/meta-llama/Meta-Llama-3.1-8B-Instruct

  * meta-llama/Meta-Llama-3.1-70B-Instruct

  * meta-llama/Meta-Llama-3.1-405B-Instruct

  * meta-llama/Llama-3.3-70B-Instruct

* Image generation models

  * black-forest-labs/FLUX.1-schnell

  * Pro/black-forest-labs/FLUX.1-schnell

  * black-forest-labs/FLUX.1-dev

  * black-forest-labs/FLUX.1-pro

  * stabilityai/stable-diffusion-xl-base-1.0

  * stabilityai/stable-diffusion-3-5-large

  * stabilityai/stable-diffusion-3-5-large-turbo

  * stabilityai/stable-diffusion-2-1

  * deepseek-ai/Janus-Pro-7B

* Speech models

  * fishaudio/fish-speech-1.5

  * FunAudioLLM/SenseVoiceSmall

  * fishaudio/fish-speech-1.4

  * RVC-Boss/GPT-SoVITS

* Video models

  * Lightricks/LTX-Video

  * genmo/mochi-1-preview

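### Platform Service Adjustment Notice

To safeguard service quality and allocate resources fairly, the Rate Limits policy is adjusted as follows:

I. Changes

1. New RPH limit (Requests Per Hour)

   * Models: deepseek-ai/DeepSeek-R1, deepseek-ai/DeepSeek-V3

   * Applies to: all users

   * Limit: 30 requests per hour

2. New RPD limit (Requests Per Day)

   * Models: deepseek-ai/DeepSeek-R1, deepseek-ai/DeepSeek-V3

   * Applies to: users who have not completed real-name verification

   * Limit: 100 requests per day

The policy may be adjusted from time to time as traffic and load change. SiliconFlow reserves the right of interpretation.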
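A client can stay under a request-count limit such as 30 requests per hour by throttling itself before sending. The following is a minimal sketch of a sliding-window limiter. How the platform actually measures the hour (sliding or fixed window) is not stated in this notice, so the window semantics here are an assumption, and the class name `SlidingWindowLimiter` is hypothetical.

```python
from collections import deque
from typing import Deque


class SlidingWindowLimiter:
    """Allow at most `max_requests` within any `window_seconds` span (client side)."""

    def __init__(self, max_requests: int, window_seconds: float) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._timestamps: Deque[float] = deque()

    def try_acquire(self, now: float) -> bool:
        """Record a request at time `now` if allowed; return whether it was allowed."""
        # Drop timestamps that have aged out of the window.
        while self._timestamps and now - self._timestamps[0] >= self.window_seconds:
            self._timestamps.popleft()
        if len(self._timestamps) >= self.max_requests:
            return False
        self._timestamps.append(now)
        return True


# 30 requests per hour, matching the RPH figure in this notice.
limiter = SlidingWindowLimiter(max_requests=30, window_seconds=3600)
```

Passing `now` explicitly (for example from `time.monotonic()`) keeps the limiter easy to test without waiting for real time to pass.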

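### Platform Service Adjustment Notice

#### 1. Model retirement notice

To provide a more stable, higher-quality, and sustainable service, the following models will be taken offline on **February 27, 2025**: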

* [01-ai/Yi-1.5-34B-Chat-16K](https://cloud.siliconflow.cn/models?target=01-ai/Yi-1.5-34B-Chat-16K)

* [01-ai/Yi-1.5-6B-Chat](https://cloud.siliconflow.cn/models?target=01-ai/Yi-1.5-6B-Chat)

* [01-ai/Yi-1.5-9B-Chat-16K](https://cloud.siliconflow.cn/models?target=01-ai/Yi-1.5-9B-Chat-16K)

* [stabilityai/stable-diffusion-3-medium](https://cloud.siliconflow.cn/models?target=stabilityai/stable-diffusion-3-medium)

* google/gemma-2-27b-it

* google/gemma-2-9b-it

* Pro/google/gemma-2-9b-it

If you are using any of the models above, we recommend migrating to another model on the platform as soon as possible.

### Platform Service Adjustment Notice

#### The price of deepseek-ai/DeepSeek-V3 returns to the original price from 00:00 on February 9, 2025 (Beijing time)

Pricing:

* Input: ¥2 / M Tokens

* Output: ¥8 / M Tokens
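Per-million-token prices convert to a request cost by scaling each token count by its price and dividing by one million. The following is a minimal sketch using the two prices listed above as defaults; the function name `estimate_cost` is hypothetical, and actual billing may round or meter differently.

```python
from decimal import Decimal

TOKENS_PER_UNIT = Decimal(1_000_000)  # prices are quoted per million tokens


def estimate_cost(
    input_tokens: int,
    output_tokens: int,
    input_price: Decimal = Decimal("2"),   # ¥ per M input tokens
    output_price: Decimal = Decimal("8"),  # ¥ per M output tokens
) -> Decimal:
    """Estimate the cost in ¥ for a given number of input and output tokens."""
    total = Decimal(input_tokens) * input_price + Decimal(output_tokens) * output_price
    return total / TOKENS_PER_UNIT


# 500,000 input tokens cost ¥1 and 250,000 output tokens cost ¥2, so ¥3 in total.
print(estimate_cost(500_000, 250_000))
```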

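### Reasoning Model Output Adjustment Notice

The chain of thought of reasoning models is no longer embedded in `content`; it is now returned in a separate `reasoning_content` field. This is compatible with the `OpenAI` and `deepseek` API conventions and makes it easier for frameworks and upper-layer applications to trim it during multi-turn conversations. For usage details, see [Using reasoning models (DeepSeek-R1)](/capabilities/reasoning).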
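With the chain of thought in its own field, trimming it from conversation history before the next turn becomes a simple key filter. The following is a minimal sketch, assuming messages are OpenAI-style dicts with `role` and `content` keys; the helper name `strip_reasoning` and the sample messages are hypothetical.

```python
from typing import Any, Dict, List


def strip_reasoning(messages: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Return copies of the messages without the `reasoning_content` key."""
    return [
        {key: value for key, value in message.items() if key != "reasoning_content"}
        for message in messages
    ]


history = [
    {"role": "user", "content": "What is 2 + 2?"},
    {"role": "assistant", "content": "4", "reasoning_content": "Add 2 and 2."},
]
# The trimmed list is what would be sent back as context for the next turn.
trimmed = strip_reasoning(history)
```

The function builds new dicts rather than mutating the input, so the full history, including the reasoning, stays available for logging or display.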

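### Platform Service Adjustment Notice

#### Support for the deepseek-ai/DeepSeek-R1 and deepseek-ai/DeepSeek-V3 models

Pricing is as follows:

* `deepseek-ai/DeepSeek-R1`: input ¥4 / M Tokens, output ¥16 / M Tokens

* `deepseek-ai/DeepSeek-V3`

  * **Limited-time discount from now until 24:00 on 2025-02-08 (Beijing time)**: input ¥1 / M Tokens (original price ¥2), output ¥2 / M Tokens (original price ¥8). The original price resumes at 00:00 on 2025-02-09.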

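### Platform Service Adjustment Notice

#### Validity of generated image and video URLs changed to 1 hour

To keep providing more advanced, higher-quality technical services, starting January 20, 2025, URLs of images and videos generated by models will be valid for 1 hour.

If you use the image or video generation services, save the generated files to your own storage promptly so that expired URLs do not affect your business.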
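An application that stores generated URLs can check their age before serving them and fall back to its own saved copy. The following is a minimal sketch; it assumes the 1-hour validity is counted from the moment the application received the URL, which this notice does not specify, and the helper name `is_url_expired` is hypothetical.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

URL_TTL = timedelta(hours=1)  # validity period stated in this notice


def is_url_expired(generated_at: datetime, now: Optional[datetime] = None) -> bool:
    """Return True once at least URL_TTL has passed since `generated_at`."""
    current = now if now is not None else datetime.now(timezone.utc)
    return current - generated_at >= URL_TTL


received = datetime(2025, 1, 20, 12, 0, tzinfo=timezone.utc)
# 59 minutes later the URL is still treated as valid.
print(is_url_expired(received, received + timedelta(minutes=59)))
```

Treating the URL as expired exactly at the one-hour mark is a conservative choice; downloading the file right after generation avoids depending on the boundary at all.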

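### Platform Service Adjustment Notice

#### LTX-Video model billing notice

To keep providing more advanced, higher-quality technical services, starting January 6, 2025, the platform will charge for video generation requests to the Lightricks/LTX-Video model at ¥0.14 per video.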

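### Platform Service Adjustment Notice

#### 1. Model retirement notice

To provide a more stable, higher-quality, and sustainable service, the following models will be taken offline on **December 19, 2024**: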

* [deepseek-ai/DeepSeek-V2-Chat](https://cloud.siliconflow.cn/models?target=deepseek-ai/DeepSeek-V2-Chat)

* [Qwen/Qwen2-72B-Instruct](https://cloud.siliconflow.cn/models?target=Qwen/Qwen2-72B-Instruct)

* [Vendor-A/Qwen/Qwen2-72B-Instruct](https://cloud.siliconflow.cn/models?target=Vendor-A/Qwen/Qwen2-72B-Instruct)

* [OpenGVLab/InternVL2-Llama3-76B](https://cloud.siliconflow.cn/models?target=OpenGVLab/InternVL2-Llama3-76B)

If you are using any of the models above, we recommend migrating to another model on the platform as soon as possible.

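### Platform Service Adjustment Notice

#### 1. Model retirement notice

To provide a more stable, higher-quality, and sustainable service, the following models will be taken offline on **December 13, 2024**: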

* [Qwen/Qwen2.5-Math-72B-Instruct](https://cloud.siliconflow.cn/models?target=Qwen/Qwen2.5-Math-72B-Instruct)

* [Tencent/Hunyuan-A52B-Instruct](https://cloud.siliconflow.cn/models?target=Tencent/Hunyuan-A52B-Instruct)

If you are using any of the models above, we recommend migrating to another model on the platform as soon as possible.

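### Platform Service Adjustment Notice

#### 1. Model retirement notice

To provide a more stable, higher-quality, and sustainable service, the following models will be taken offline on **November 22, 2024**: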

* [deepseek-ai/DeepSeek-Coder-V2-Instruct](https://cloud.siliconflow.cn/models?target=deepseek-ai/DeepSeek-Coder-V2-Instruct)

* [Qwen/Qwen2-57B-A14B-Instruct](https://cloud.siliconflow.cn/models?target=Qwen/Qwen2-57B-A14B-Instruct)

* [Pro/internlm/internlm2\_5-7b-chat](https://cloud.siliconflow.cn/models?target=Pro/internlm/internlm2_5-7b-chat)

* [Pro/THUDM/chatglm3-6b](https://cloud.siliconflow.cn/models?target=Pro/THUDM/chatglm3-6b)

* [Pro/01-ai/Yi-1.5-9B-Chat-16K](https://cloud.siliconflow.cn/models?target=Pro/01-ai/Yi-1.5-9B-Chat-16K)

* [Pro/01-ai/Yi-1.5-6B-Chat](https://cloud.siliconflow.cn/models?target=Pro/01-ai/Yi-1.5-6B-Chat)

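If you are using any of the models above, we recommend migrating to another model on the platform as soon as possible.

#### 2. Email login update

To further improve the service experience, starting **November 22, 2024**, the platform will change the login method from "email account + password" to "**email account + verification code**".

#### 3. New overseas API endpoint

A new platform endpoint for overseas users is available: [https://api-st.siliconflow.cn](https://api-st.siliconflow.cn). If you run into network connectivity problems with the original endpoint [https://api.siliconflow.cn](https://api.siliconflow.cn), we recommend trying the new endpoint.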
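Switching endpoints on connectivity problems can be automated by trying each endpoint in order. The following is a minimal sketch that takes the connectivity check as a caller-supplied function, so no particular HTTP library or health-check path is assumed; the names `first_reachable` and `probe` are hypothetical.

```python
from typing import Callable, Optional, Sequence

# Original endpoint first, then the overseas endpoint from this notice.
ENDPOINTS = ("https://api.siliconflow.cn", "https://api-st.siliconflow.cn")


def first_reachable(
    probe: Callable[[str], bool],
    endpoints: Sequence[str] = ENDPOINTS,
) -> Optional[str]:
    """Return the first endpoint for which `probe` succeeds, or None if all fail."""
    for endpoint in endpoints:
        try:
            if probe(endpoint):
                return endpoint
        except OSError:
            # Network-level failures count as "not reachable"; try the next one.
            continue
    return None
```

In real use, `probe` would issue a cheap authenticated request with a short timeout; which request to use for that is left to the caller.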

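### Pricing Adjustment Notice for Selected Models

To provide a more stable, higher-quality, and sustainable service, the limited-time free model [Vendor-A/Qwen/Qwen2-72B-Instruct](https://cloud.siliconflow.cn/models?target=17885302571) will start being billed on October 17, 2024. Billing details:

* Limited-time discount price: ¥ 1.00 / M tokens

* Original price: ¥ 4.13 / M tokens (the date the original price resumes will be announced separately)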

# Community Scenarios and Applications

Source: https://docs.siliconflow.cn/cn/usercases/awesome-user-cases

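# SiliconCloud Scenarios and Application Cases

Easily integrate the large-model capabilities of the SiliconCloud platform into a wide range of scenarios and applications.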

### 1. Translation scenarios

SiliconCloud 团队

沧海九粟

ohnny\_Van

mingupup

行歌类楚狂

### 2. Search and RAG scenarios

MindSearch 团队

LogicAI

白牛

沧海九粟

### 3. Coding scenarios

xDiexsel

野原广志2\_0

### 4. Analysis scenarios

行歌类楚狂

### 5. Communication scenarios

大大大维维

### 6. Image generation scenarios

mingupup

baiack

糯.米.鸡

### 7. Reviews and evaluations

湖光橘鸦

自牧生

### 8. Open-source projects

郭垒

laughing

### 9. Other

mingupup

郭垒

free-coder

三千酱

# 302.AI

Source: https://docs.siliconflow.cn/cn/usercases/use-siliconcloud-in-302ai

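## 1. About 302.AI

302.AI is a pay-as-you-go AI application platform that offers a wide range of online AI applications and comprehensive AI API access.

302.AI partners with SiliconFlow so that 302.AI users can use all SiliconFlow models and ready-to-use AI applications directly on the 302 platform. SiliconFlow users can also connect SiliconFlow model APIs as custom models on the 302 platform without developing or deploying anything themselves.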

## 2. 硅基流动用户如何在302.AI使用 SiliconCloud 模型

### 2.1 接入聊天机器人进行对话

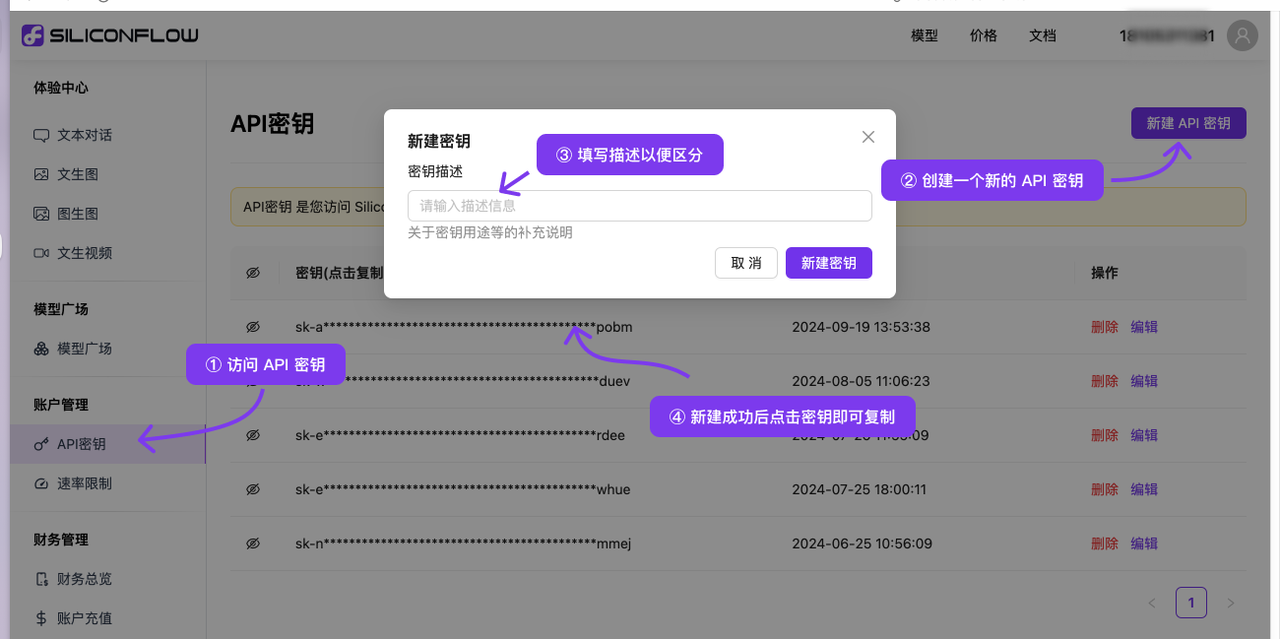

#### 2.1.1 获取 SiliconCloud 的API Key 和模型 ID

(1)打开 SiliconCloud 官网 并注册账号(如果注册过,直接登录即可)。

(2)完成注册后,打开API密钥,创建新的 API Key,点击密钥进行复制,以备后续使用。

(3)在模型广场找到需接入的模型ID备用。

#### 2.1.2 设置自定义模型

(1)依次点击: **使用机器人 → 聊天机器人 → 模型**。

(2)点击左下角的自定义模型,填写相关配置信息。

(2)点击左下角的自定义模型,填写相关配置信息。

(3)示例模型:硅基流动部署的Qwen/Qwen2.5-7B-Instruct:

* API base选择硅基流动。

* 填写API Key、模型ID等,并根据模型特性进行相应配置;

* 点击【检查】按钮,一键检测信息是否填写正确(示例绿标即为通过);

* 按需选择中转地区(中转地区选择功能可以解决部分模型提供商因为IP地区限制访问的问题);

* 可设置备用模型,当自定义模型失效时可以自动切换到备用模型;

* 所有配置完成后,点击【确认】按钮;

#### 2.1.3 Create a chatbot

(1) Once connected, the model appears under the Custom Model category. Select it and click **Confirm**;

(2) Click the **Create Chatbot** button;

(3) Open the chatbot and send a message to start the conversation;

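(All models support web search, image analysis, deep thinking, and other capabilities.)

#### 2.1.4 Custom model pricing

A chatbot with a custom model enabled costs USD 0.05 per bot per day, charged daily.

Model usage fees are deducted from your SiliconCloud account balance.

### 2.2 Connect the API to extend functionality

#### 2.2.1 Create an API Key

(1) First, create an API Key that supports relaying custom models.

Detailed steps: **Use API → API Keys → enter an API name → enable custom model relay → Add API KEY**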

(2) Copy the generated API Key for later use;

(3) Check the name of the relayed custom model so that you can call it easily.

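#### 2.2.2 Add new capabilities to a model

Models relayed through 302 can gain capabilities that the models themselves do not have.

Currently supported:

Web search, deep search, image analysis, reasoning mode, link parsing, tool calling, and long-term memory

(Click an item to open its documentation and follow the instructions there; the list is updated continuously.)

Taking web search for a custom model as an example:

(1) Open the relevant documentation and click the **Debug** button;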

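(2) Click "Go set variable value", paste the generated API Key, and click **Save**;

(Note: model relay must be enabled manually, so the corresponding API Key has to be pasted in by hand.)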